The Robotic Sense We’ve Been Missing

Inside the push to give robots a human-like sense of touch—and why Amazon’s Vulcan marks a pivotal breakthrough.

Aaron’s Thoughts On The Week

“The human hand is the most perfect tool ever devised.” — Jacob Bronowski

For decades, the promise of warehouse automation has revolved around speed, scale, and consistency. Robots have become faster, vision systems sharper, and software more predictive. Yet amid these advances, a fundamental capability has remained out of reach: the sense of touch. While vision has enabled robots to identify and locate objects, touch is what allows humans to truly interact with the physical world—to feel resistance, adjust grip, and handle objects of varied shapes, textures, and fragility.

Touch is not merely a supplement to vision; it is essential to dexterity. From picking up a plastic bottle to stacking items with care, tactile feedback enables humans to perform tasks that require nuance. Without touch, robotic systems are limited in scope and unable to match the adaptability and finesse of their human counterparts. As automation expands into more complex domains, the need for robots to sense and respond through contact becomes increasingly urgent.

Amazon’s introduction of Vulcan marks a turning point in addressing this challenge. Vulcan is equipped with a sense of touch through force-sensitive grippers and joint sensors, allowing it to manipulate approximately 75% of the items in Amazon’s fulfillment centers. This is a significant milestone not because Vulcan is perfect, but because it shows what’s possible when touch becomes part of the equation. The breakthrough validates what roboticists have long believed: to build truly useful and generalizable machines, we must teach robots to feel.

Why Touch Matters in Robotics

In human environments, touch is fundamental. It helps us gauge pressure, detect texture, and adjust our grip on fragile or slippery items without conscious thought. Most robotic systems, however, rely heavily on vision and pre-programmed motion, making them brittle when faced with the unpredictability of real-world handling. A slightly dented cereal box or a slippery shampoo bottle can throw off a vision-only system.

Touch fills that gap. By incorporating force, pressure, and tactile sensors, robots can dynamically adapt to shape, weight, and texture variations, just as a human would. This capability is particularly vital in warehouses, where the variety of items is immense, and subtle differences between packages can significantly impact how they should be grasped, lifted, or placed.

The Vulcan Breakthrough

Amazon’s Vulcan robot is a compelling advancement in this direction. Unveiled last week, Vulcan has force-sensitive grippers and joint sensors that give it a sense of touch. These allow the robot to respond in real time to an object’s resistance and feel. With this capability, Vulcan can handle approximately 75% of the items in Amazon’s fulfillment centers, a dramatic improvement over previous robots that struggled with oddly shaped or delicate items.

Vulcan’s design integrates sensor-rich joints and grip feedback loops, allowing it to adjust its hold on an item depending on how the item deforms or shifts. The robot does not need to “see” every aspect of an item perfectly to manipulate it effectively. This reduces the need for precise placement and scanning and makes the robot more resilient to environmental variability.
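To make the idea of a grip feedback loop concrete, here is a minimal sketch in Python. It is not Amazon’s implementation, which has not been published in this detail. The `TactileReading` type, the `next_grip_force` function, and every gain and limit below are hypothetical values chosen for illustration. The sketch assumes the gripper reports how far an item has slipped and how much it has deformed, then tightens on slip and loosens on excess squeeze.

```python
from dataclasses import dataclass


@dataclass
class TactileReading:
    """One hypothetical sensor sample from the gripper."""
    slip_mm: float         # how far the item shifted since the last sample
    deformation_mm: float  # how far the item's surface has compressed


def next_grip_force(
    force_n: float,
    reading: TactileReading,
    slip_gain: float = 2.0,          # newtons added per mm of slip (assumed)
    deform_gain: float = 1.5,        # newtons removed per mm of excess squeeze (assumed)
    max_deformation_mm: float = 1.0,  # allowed compression before backing off (assumed)
    min_force_n: float = 0.5,
    max_force_n: float = 20.0,
) -> float:
    """Return the grip force to command for the next control cycle."""
    # Tighten when the item is slipping.
    force_n += slip_gain * reading.slip_mm
    # Loosen when the item is compressed beyond the allowed deformation.
    excess = max(0.0, reading.deformation_mm - max_deformation_mm)
    force_n -= deform_gain * excess
    # Keep the command inside the gripper's safe range.
    return min(max_force_n, max(min_force_n, force_n))


if __name__ == "__main__":
    force = 3.0
    samples = [
        TactileReading(slip_mm=0.8, deformation_mm=0.2),  # slipping: tighten
        TactileReading(slip_mm=0.0, deformation_mm=2.0),  # crushing: loosen
        TactileReading(slip_mm=0.0, deformation_mm=0.5),  # stable: hold
    ]
    for sample in samples:
        force = next_grip_force(force, sample)
        print(f"commanded grip force: {force:.2f} N")
```

A real controller would likely filter noisy sensor data and tune its gains per item class. The point here is only that the hold is adjusted from contact signals rather than from what a camera sees.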

Other Touch-Enabled Robotics Projects

Amazon isn’t alone in this quest. Several other companies and research institutions are developing robots with tactile capabilities:

Shadow Robot Company has developed a highly dexterous robotic hand with tactile sensors embedded in the fingertips, capable of manipulating delicate objects like eggs or fruit.

MIT’s GelSight project uses high-resolution tactile sensors that capture objects' surface texture and contours on contact, enabling fine manipulation tasks.

Soft Robotics Inc. uses adaptive soft grippers embedded with sensors to mimic the compliant, flexible nature of human touch, allowing them to handle a wide range of consumer products.

These projects show the expanding frontier of touch-enabled robotics across industries—from retail to healthcare to agriculture.

What’s Still Missing

Despite this progress, robotic touch is still in its early stages. The human hand has over 17,000 touch receptors, and replicating that fidelity in machines is technically and economically challenging. Most tactile sensors today can detect gross pressure or contact but struggle to capture fine-grained signals such as texture, temperature, or the onset of slip.

Furthermore, integrating touch into real-time robotic control loops remains difficult. Robots must sense, interpret, and act on tactile feedback within milliseconds. This requires advanced algorithms, low-latency hardware, and robust machine learning systems trained on diverse handling scenarios.
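One way to picture the timing constraint is as a latency budget shared by the sense, interpret, and act stages. The Python sketch below is an illustration under stated assumptions, not a description of any production controller: the function names, the stub stages, and the 5 ms budget are all hypothetical.

```python
import time
from typing import Callable, Dict


def timed_cycle(stages: Dict[str, Callable[[], None]]) -> Dict[str, float]:
    """Run each stage once and return its wall-clock duration in milliseconds."""
    durations = {}
    for name, stage in stages.items():
        start = time.perf_counter()
        stage()
        durations[name] = (time.perf_counter() - start) * 1000.0
    return durations


def over_budget(durations_ms: Dict[str, float], budget_ms: float) -> bool:
    """True when the whole sense-interpret-act cycle missed its deadline."""
    return sum(durations_ms.values()) > budget_ms


if __name__ == "__main__":
    # Stub stages standing in for real sensor reads, inference, and motor commands.
    stages = {
        "sense": lambda: None,
        "interpret": lambda: None,
        "act": lambda: None,
    }
    durations = timed_cycle(stages)
    budget_ms = 5.0  # assumed budget, for illustration only
    for name, ms in durations.items():
        print(f"{name}: {ms:.3f} ms")
    print("missed deadline" if over_budget(durations, budget_ms) else "within budget")
```

Measuring each stage separately shows where the budget goes. If interpretation alone consumes most of the cycle, a faster sensor will not help.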

There are also challenges in scaling. Tactile sensors tend to be fragile or expensive, and many are not yet hardened for the industrial environments found in warehouses. Durability, cost, and ease of integration will be critical to widespread adoption.

The Future of Robotic Touch

The arrival of Vulcan marks an important milestone. It signals a shift in how robotic systems are built and what is expected of them. Instead of relying solely on cameras and coded routines, next-generation robots will need to feel their way through the world, learning from tactile interactions like humans do.

Touch may be the missing link that bridges today’s functional automation and tomorrow’s collaborative, adaptive robotics. As Vulcan and its peers continue to evolve, we can expect more agile warehouse systems, safer human-robot interactions, and perhaps even more general-purpose machines that can move from sorting packages to assisting with daily living.

For now, the sense of touch is no longer exclusive to humans. That could be a very good thing in a world increasingly shaped by automation.

Robot News Of The Week

Persona AI Raises $27M Oversubscribed Pre-Seed to Deliver the Future of Humanoid Robotics

Persona AI has raised $27M in an oversubscribed pre-seed round to accelerate the development of its humanoid robots for industrial use, including shipbuilding and manufacturing. Founded in 2024 by robotics veterans, Persona is partnering with HD Hyundai to deploy robots in shipyards within 18 months. With a robotics-as-a-service model, the company aims to solve labor shortages and improve workplace safety. Backed by Unity Growth, Tides Ventures, and others, Persona is targeting a share of the projected $3 trillion U.S. humanoid labor market. Investors see the company as a standout in industrial automation with early traction and a bold vision.

DHL buying 1,000+ Stretch robots from Boston Dynamics

DHL Group is expanding its partnership with Boston Dynamics, planning to deploy over 1,000 Stretch robots by 2030 to boost warehouse automation. Initially used for container unloading, Stretch will soon take on case picking—one of DHL’s most labor-intensive tasks. The companies have collaborated since 2018, with Stretch already deployed in North America and Europe. DHL has invested €1 billion in automation and operates over 7,500 robots globally. This move reflects DHL’s push for smarter, more resilient logistics, with Stretch poised to become a versatile solution across operations as part of the company’s broader digital transformation strategy.

Robot Research In The News

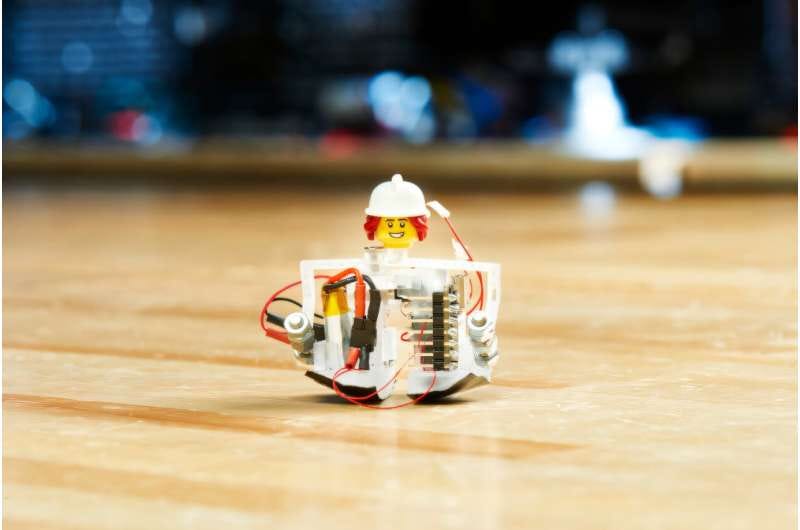

Carnegie Mellon researchers have developed “Zippy,” the world’s smallest self-contained bipedal robot—just 1.5 inches tall. Despite its tiny size, Zippy can walk, turn, skip, and climb steps using only its onboard battery, actuator, and control system. It moves at a remarkable 10 leg lengths per second, equivalent to a human running 19 mph. Designed for tight or hazardous environments, Zippy could aid in search and rescue, inspection, or scientific exploration. Led by professors Aaron Johnson and Sarah Bergbreiter, the project aims to advance miniature legged robot design for real-world applications, with plans to add sensors and enable autonomous swarm navigation.
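The human-equivalent speed can be sanity-checked with a quick calculation. This Python sketch assumes an adult leg length of about 0.85 m, which is our assumption and not a figure from the researchers. The function name is likewise just illustrative.

```python
METERS_PER_MILE = 1609.344
SECONDS_PER_HOUR = 3600


def scaled_speed_mph(leg_lengths_per_second: float, leg_length_m: float) -> float:
    """Convert a speed in leg lengths per second to miles per hour."""
    meters_per_second = leg_lengths_per_second * leg_length_m
    return meters_per_second * SECONDS_PER_HOUR / METERS_PER_MILE


if __name__ == "__main__":
    # 10 leg lengths per second with an assumed 0.85 m human leg
    print(round(scaled_speed_mph(10, 0.85)))  # prints 19
```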

Whole-body teleoperation system allows robots to perform coordinated tasks with human-like dexterity

Stanford and Simon Fraser researchers have developed TWIST, a real-time teleoperation system that lets humanoid robots imitate full-body human movements with high accuracy. Using motion capture and AI, TWIST enables robots to perform complex tasks like lifting, walking, and even dancing. Tested on robots like Unitree’s G1, the system showcases potential for training robots in real-world environments. The long-term goal: collect large-scale human motion data to teach robots autonomous, human-like dexterity for tasks in hazardous or high-precision settings.

Robot Workforce Story Of The Week

Learning with Robots, and Code That Drives Them, Opens Future for Students

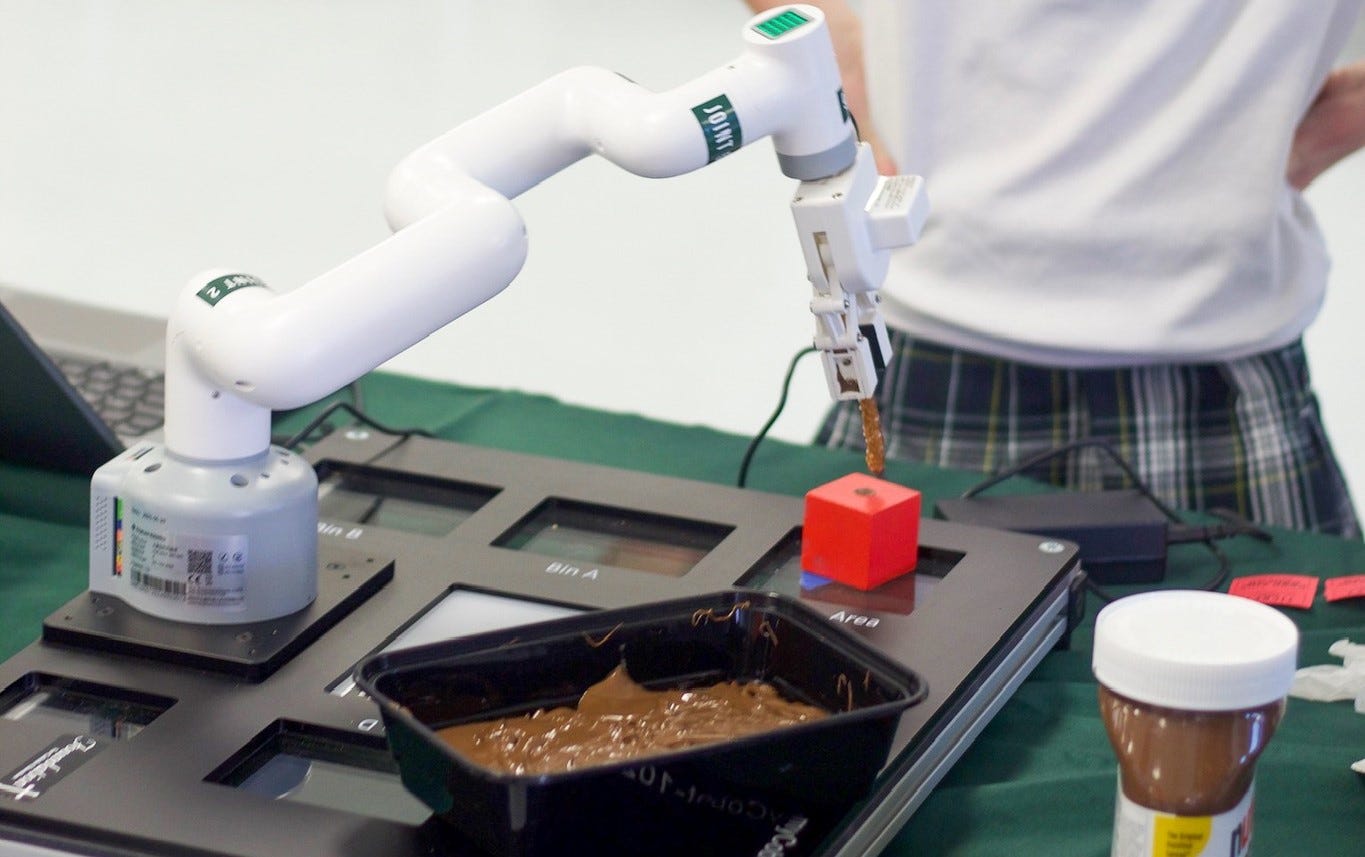

A new robotics initiative is transforming Catholic elementary schools across the Archdiocese of Philadelphia. Launched in 2024 and supported by the Foundation for Catholic Education and CTDI chairman Jerry Parsons, the program has expanded to 37 schools, with 20 more joining soon. Students from kindergarten to 8th grade learn coding through robot arms and “Marty the Robot” devices, gaining early exposure to skills used in industry. The program boosts engagement, creativity, and problem-solving while strengthening the STREAM curriculum. Parsons’ support and educators’ dedication are helping prepare students for a tech-driven future grounded in faith and innovation.

Robot Video Of The Week

Automate took place this week in Detroit. Here are some of the robots that were on the expo floor.

Upcoming Robot Events

May 17-23 ICRA 2025 (Atlanta, GA)

May 18-21 Intl. Electric Machines and Drives Conference (Houston, TX)

May 20-21 Robotics & Automation Conference (Tel Aviv)

May 29-30 Humanoid Summit (London)

June 9-13 London Tech Week

June 17-18 MTC Robotics & Automation (Coventry, UK)

June 30-July 2 International Conference on Ubiquitous Robots (College Station, TX)

Aug. 17-21 Intl. Conference on Automation Science & Engineering (Anaheim, CA)

Sept. 15-17 ROSCon UK (Edinburgh)

Sept. 23 Humanoid Robot Forum (Seattle, WA)

Sept. 27-30 IEEE Conference on Robot Learning (Seoul, KR)

Sept. 30-Oct. 2 IEEE International Conference on Humanoid Robots (Seoul, KR)

Oct. 6-10 Intl. Conference on Advanced Manufacturing (Las Vegas, NV)

Oct. 15-16 RoboBusiness (Santa Clara, CA)

Oct. 19-25 IEEE IROS (Hangzhou, China)

Oct. 27-29 ROSCon (Singapore)

Nov. 3-5 Intl. Robot Safety Conference (Houston, TX)

Dec. 11-12 Humanoid Summit (Silicon Valley TBA)