The Art of the Grasp

Why Robotic Manipulation Will Make or Break the Future of Automation

"What the hand does, the mind remembers." – Maria Montessori

When people think of robots, they often picture humanoids walking upright or autonomous vehicles gliding through city streets. But in the real world, whether in factories, warehouses, restaurants, or disaster zones, the difference between a helpful robot and an expensive statue usually comes down to one core capability: manipulation. Can it reliably grasp, handle, and interact with the world around it? If not, it may never leave the lab.

As robots continue to move from controlled environments into the messy, unpredictable spaces humans occupy, the ability to manipulate objects safely and repeatably is becoming a defining frontier for the industry. It’s not just about picking and placing anymore. It’s about doing, from folding laundry to making coffee, from sorting recyclables to assisting with elder care. The future of robot manipulation lies at the heart of whether robotics will truly scale beyond niche deployments.

Beyond the Arm: Industry Needs Are Evolving

In the industrial sector, robotic arms have long been a staple on assembly lines. But those arms have mainly been caged, operating in structured environments where every item arrives in the same place, at the same angle, thousands of times a day. That era is ending. Modern manufacturing and logistics demand flexibility. Parts are coming in varied orientations. Orders are changing by the hour. Human workers are shifting from manual tasks to oversight roles. The robots must adapt.

Mobile manipulators, robots that can both move and handle objects, are now being called on to do tasks once thought impossible. Imagine a robot that can navigate a warehouse, identify a damaged product, pick it off a shelf, and replace it. Or one that can remove a burr from a metal casting, open a drawer, and grab the correct tool. These systems require not only dexterity but also context awareness, adaptability, and autonomy. They must learn to see, feel, and react, and they must do it safely and consistently.

Into Public Spaces: From Labs to Lobbies

Outside of industry, manipulation is poised to redefine service robotics. Think about elder care robots assisting with meals, trash collection bots on urban streets, or robotic assistants in hotels and airports. None of these applications is viable without highly reliable manipulation. Even a simple act, such as handing someone a coffee cup, requires precise control, compliant force application, and real-time feedback. Without these abilities, robots in public spaces risk being awkward, unsafe, or simply ineffective.

The same goes for robotics in disaster response, agriculture, and healthcare. From cutting vines in a vineyard to clearing rubble after an earthquake, the ability to physically interact with unpredictable environments is critical. That’s why manipulation is no longer a side feature; it’s the main event in the push to integrate robotics into everyday life.

Performance, Repeatability, and the Standards That Matter

If robotic manipulation is to become a reliable tool across industries, we need a common understanding of what “good” looks like. That means measurable performance. How accurately can a robot grasp an object of a specific shape and weight? How consistently can it do so over 1,000 cycles? How does its performance degrade under real-world conditions such as dust, glare, or temperature changes?
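As a rough illustration of what measurable repeatability could look like, the sketch below summarizes a batch of grasp trials as a success rate with a Wilson score confidence interval. The function name, the 95% confidence level, and the sample counts are assumptions made for illustration; they are not taken from any ASTM test method.

```python
import math


def grasp_success_summary(successes: int, trials: int, z: float = 1.96):
    """Return (rate, lower, upper) for a batch of grasp trials.

    Uses the Wilson score interval; z = 1.96 corresponds to roughly 95%
    confidence. All names here are hypothetical, for illustration only.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")

    rate = successes / trials
    denom = 1 + z**2 / trials
    center = (rate + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(
        rate * (1 - rate) / trials + z**2 / (4 * trials**2)
    )
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return rate, max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical example: 987 successful grasps out of 1,000 cycles.
rate, low, high = grasp_success_summary(987, 1000)
print(f"success rate {rate:.1%}, interval {low:.1%} to {high:.1%}")
```

Reporting an interval alongside the raw rate makes it easier to tell whether two systems genuinely differ or whether one simply ran a luckier batch of trials.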

This is where standardization comes into play. Performance measurement and repeatability must be codified, not only for benchmarking but also to ensure interoperability and guide procurement, insurance, and regulatory oversight. The ASTM F45 committee, for instance, is developing test methods and practices to characterize the robot manipulation capabilities of mobile systems. Standards like F3713-25, focused on documenting the configuration of test workpieces, lay the groundwork for repeatable, comparable, and trusted evaluations.

Without standards, progress becomes anecdotal. We end up with systems that might work in a demo, but fail in deployment. With standards, we get transparency. We get a shared language. We get trust.

Safety Is Not Optional

As manipulation capabilities increase, so do the risks. A robot arm that can lift 20 pounds and operate at high speed is also capable of causing serious injury. And it’s not just about physical harm; grippers can crush delicate items, drop valuable payloads, or accidentally trigger machinery.

This is why safety standards specific to manipulation are urgently needed. Collision detection, force limits, and failure handling aren’t afterthoughts. They’re prerequisites for real-world use. As robots become more collaborative, working alongside humans, the expectations for safe and predictable behavior will only continue to grow.
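To make the idea of force limits and failure handling concrete, here is a minimal sketch of a software guard that maps a measured contact force to an action. The class name, the 50 N limit in the example, and the 80% warning band are hypothetical values chosen for illustration; real limits would come from the applicable safety standard and a risk assessment, and this logic would sit alongside hardware safeguards rather than replace them.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow_down"
    STOP = "stop"


@dataclass(frozen=True)
class ForceGuard:
    """Hypothetical guard that turns a force magnitude into an action."""

    limit_newtons: float        # assumed limit, set by a risk assessment
    warn_fraction: float = 0.8  # assumed warning band below the limit

    def check(self, measured_newtons: float) -> Action:
        # Fail safe: an invalid reading (NaN, infinite, or negative
        # magnitude) is treated as a sensor failure and stops the motion.
        if not math.isfinite(measured_newtons) or measured_newtons < 0:
            return Action.STOP
        if measured_newtons >= self.limit_newtons:
            return Action.STOP
        if measured_newtons >= self.warn_fraction * self.limit_newtons:
            return Action.SLOW_DOWN
        return Action.CONTINUE


# Hypothetical usage with a 50 N limit.
guard = ForceGuard(limit_newtons=50.0)
for reading in (10.0, 45.0, 60.0, float("nan")):
    print(reading, guard.check(reading).value)
```

The design choice worth noting is that a missing or nonsensical sensor reading leads to a stop rather than being ignored, which reflects the point that failure handling is a prerequisite and not an afterthought.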

That means we need not only hardware safeguards but software validation, system-level testing, and operational standards tailored to different use cases. Just as the automotive industry matured through safety ratings, fail-safes, and test protocols, the robotics sector must do the same, especially in manipulation, where interaction with people and objects is unavoidable.

Why This Matters—Even If You Don’t See It

Many companies in robotics and automation understand that manipulation is essential, but they struggle to articulate why. That’s because manipulation isn’t flashy. It’s not a bold headline or a sleek demo. It’s the boring, vital backbone of functionality. You don’t notice good manipulation; you notice its absence.

When a warehouse robot mishandles a box and stops the line, when a service bot spills a drink, or when a medical assistant drops a syringe during a procedure, manipulation is the difference between trust and rejection, between growth and stagnation.

For robotics to reach its potential, manipulation must be taken seriously, not just as a technical challenge, but as an enabler of real value. It’s what makes robots more than machines. It’s what lets them do.

The Road Ahead

There’s no silver bullet in robotic manipulation. Progress will come from better hardware, more intelligent AI, tighter integration between sensors and control systems, and, critically, clear performance benchmarks. Industry, academia, and standards bodies must continue to collaborate to define, test, and enhance the capabilities of robotic manipulation.

We’re at an inflection point. Just as mobility broke open the world of autonomous vehicles, manipulation is now opening the door to broader robotic integration. The companies, governments, and technologists who invest in this capability today will define the automation landscape of tomorrow.

So the next time you see a robot pick up a tool, fold a towel, or offer a helping hand, remember that it’s not just a gimmick. That’s the future, taking hold one object at a time.

Robot News Of The Week

Google rolls out new Gemini model that can run on robots locally

Google DeepMind has launched Gemini Robotics On-Device, a new version of its robot control AI that runs directly on robots—no internet required. It builds on the original Gemini Robotics model released in March and can be fine-tuned using natural language.

In demos, robots using the model performed tasks like folding clothes and unzipping bags. Despite being trained on Google’s ALOHA robots, the model also worked well with the Franka FR3 and Apptronik’s Apollo humanoid, even handling unfamiliar tasks like industrial assembly.

Google also introduced a Gemini Robotics SDK, allowing developers to train robots on new tasks with just 50–100 demonstrations using the MuJoCo simulator.

Other companies jumping into robot AI include Nvidia, Hugging Face, and Korean startup RLWRLD, all working on foundation models for robotics.

Inbolt to bring its real-time robot guidance systems to the U.S., Japan

Paris-based Inbolt, a developer of AI-driven 3D vision systems for robots, is expanding into the U.S. and Japan. Already used by major manufacturers like Ford, Volkswagen, Renault, and Stellantis, Inbolt’s tech allows robots to quickly adapt to changing tasks without retooling.

Its GuideNow system, powered by AI and a single Intel RealSense camera, enables real-time part localization and trajectory updates, even on moving lines or cluttered pallets. Robots trained on CAD files in under five minutes can integrate with systems from FANUC, ABB, KUKA, and Universal Robots—without the need for costly sensors.

Backed by a $17M Series A in 2024, Inbolt is launching teams in Detroit and Tokyo, supporting demand from reshoring efforts in the U.S. and Japan’s advanced manufacturing sector. Deployed in over 50 factories, Inbolt claims its systems have powered 20+ million robot cycles in the first half of 2025, cutting downtime by up to 97% and part rejection rates by 80%.

As COO Albane Dersey puts it, “To build a factory that never stops, you need machines that can truly see.”

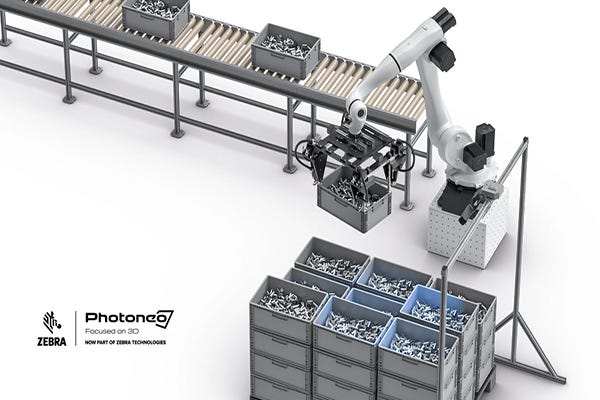

Photoneo unveils AI bin picking software update, new 3D blue laser sensor

Photoneo, now part of Zebra Technologies, unveiled two major innovations at Automatica 2025 in Munich this week: upgraded bin picking software and a new 3D sensor. The software, Bin Picking Studio 1.10 and Locator Studio 1.3, introduces AI-driven features such as dedicated tote detection and multi-neural network switching, enabling faster and more accurate object recognition even in complex or distorted environments.

The new 3D sensor, building on the MotionCam-3D Color (Blue), uses advanced blue laser technology to improve precision scanning over longer distances and with more difficult materials. Photoneo states that these enhancements reduce scan times, improve 3D data completeness, and facilitate faster integration across a growing range of robot brands.

CTO Svorad Stolc describes the updates as a significant advance in robotic intelligence, enabling broader automation opportunities with simpler setup and improved performance in logistics, manufacturing, and more.

Robot Research In The News

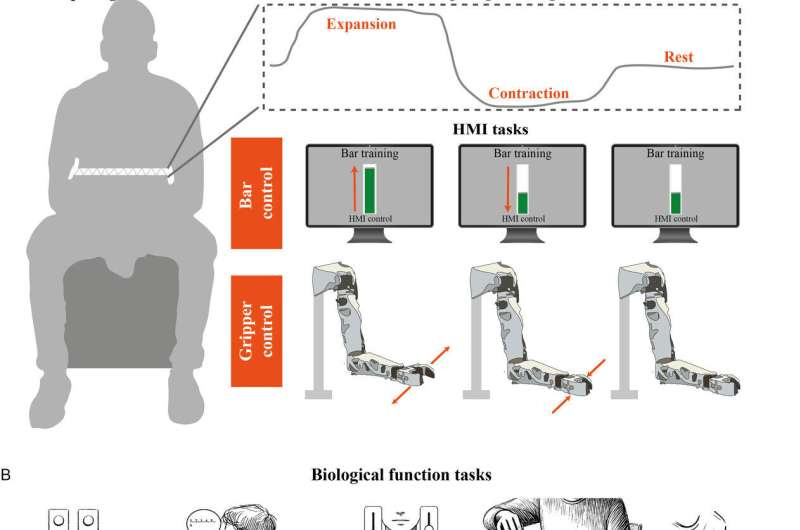

Exploring skill generalization with an extra robotic arm for motor augmentation

A new study in Advanced Intelligent Systems shows that the brain can learn to control an artificial third arm and even generalize simple tasks with it—offering promising insight into future applications for surgeons, first responders, and rehabilitation.

In the experiment, 20 participants used a robotic arm, controlled by breathing via a diaphragm belt, to move blocks and press buttons. Researchers found that, with training, participants could generalize movements from one task to another, much as we learn with our natural limbs. However, multitasking with the artificial arm remained challenging, and generalization decreased as tasks became more complex.

While the current control method is basic, using breath instead of brain signals, researchers hope future non-invasive tools, like scalp electrodes, will improve precision. For now, the real promise may lie not in human augmentation, but in understanding how the brain adapts—knowledge that could aid stroke recovery and other neurorehabilitation efforts.

Robot Workforce Story Of The Week

Philly’s student robotics scene is running on empty

Just a few years after celebrating a significant funding boost, Philadelphia’s school robotics programs are now shrinking due to inconsistent support. Since a major contract between the Philadelphia Robotics Coalition (PRC) and the School District of Philadelphia expired in 2024, many student teams lack the resources to compete or continue.

The number of robotics teams in city schools has dropped from a peak of 131 to just 73 this past school year. Coaches say students now face unequal footing in competitions, and teachers often go unpaid for coaching time. PRC, once able to support events and high school teams, now focuses mainly on K–8 programs with help from small grants and partners like the Department of Defense and charitable foundations.

Despite limited district support, the community is stepping up. Programs like Caring People Alliance’s SeaPerch team, supported by Temple University, continue to thrive outside school walls. PRC hopes to expand robotics access through local libraries and community centers to keep opportunities alive for all Philly students.

Robot Video Of The Week

Shenzhen, in south China's Guangdong Province, is quickly emerging as the world’s most dynamic hub for humanoid robotics. Already known as the "Silicon Valley of Hardware," the city is home to a staggering 74,000 robotics companies, forming a dense ecosystem of suppliers, startups, and AI innovators.

Firms like UBTech, Fourier Intelligence, and Unitree Robotics are actively building and testing humanoids for use in logistics, manufacturing, and even elder care. What gives Shenzhen an edge is its unmatched ability to iterate quickly—thanks to deep local talent, access to components, and strong government backing.

With China naming humanoid robots as a strategic priority, Shenzhen is becoming the launchpad for scaled deployment. While global players make headlines, Shenzhen may be the first city where humanoid robots are truly put to work.

Upcoming Robot Events

June 30-July 2 International Conference on Ubiquitous Robots (College Station, TX)

Aug. 17-21 Intl. Conference on Automation Science & Engineering (Anaheim, CA)

Aug. 25-29 IEEE RO-MAN (Eindhoven, Netherlands)

Sept. 3-5 ARM Institute Member Meetings (Pittsburgh, PA)

Sept. 15-17 ROSCon UK (Edinburgh)

Sept. 23 Humanoid Robot Forum (Seattle, WA)

Sept. 27-30 IEEE Conference on Robot Learning (Seoul, KR)

Sept. 30-Oct. 2 IEEE International Conference on Humanoid Robots (Seoul, KR)

Oct. 6-10 Intl. Conference on Advanced Manufacturing (Las Vegas, NV)

Oct. 15-16 RoboBusiness (Santa Clara, CA)

Oct. 19-25 IEEE IROS (Hangzhou, China)

Oct. 27-29 ROSCon (Singapore)

Nov. 3-5 Intl. Robot Safety Conference (Houston, TX)

Dec. 11-12 Humanoid Summit (Silicon Valley TBA)