The Geopolitics of Teleoperated Robots

Outsourcing Touch: Power, Labour, and the Politics of Remote Control

“All revolutions are born in the gap between what is and what could be.” — Rebecca Solnit

On a damp evening in Tokyo, a shelf of iced energy drinks is nearly empty again. A compact robot hovers into view, its arm extending, grasping, and placing a fresh can with mechanical precision. Meanwhile, 3,000 kilometres away in Manila, a young operator dons a VR headset, grips twin joysticks, and guides the machine back into rhythm. For a few fleeting minutes, the machine becomes an extension of a remote human hand. The operator watches the shelf camera feed, moves the arm slightly deeper, nudges the can with a tiny adjustment, then releases. The robot records the motion and returns to autonomy, and the operator goes back to monitoring mode.

This is no glossy science fiction. It is real, and increasingly common. The Tokyo-based startup Telexistence has deployed restocking robots in more than 300 convenience stores in Japan; they are controlled in part by teleoperators in the Philippines through its partner Astro Robotics. Offshoring is no longer confined to data centres and call centres: through embodied automation, it now reaches what used to be purely local physical labour.

Let’s explore the evolution of robot teleoperation: how the technology got here, what the engineering and human‐factors challenges remain, how safety standards and governance frameworks are changing (or need to change), and how the political economy of remote labour is being rewired. The convenience-store example is the canary in the coal mine for a broader shift—one where physical work becomes digital, global, and networked.

Tele-operation in context

The notion of tele-operated machines is far from new. Early remotely operated robots were deployed in hazardous settings, including nuclear plants, deep-sea exploration, and spacewalks. The operator sat safely far away, manipulated controls, and the robot executed physical work. What is new—and accelerating—is the extension of this paradigm into everyday commercial settings: retail stores, warehouses, logistics hubs, even homes.

In Japan, demographic decline and labour shortages have pressured retailers to innovate. Enter Telexistence’s shelf-restocking robots, deployed in Tokyo convenience stores. While the machine handles most of the repetitive bottle-placing, the “human fallback” remains: when the robot errs—dropping a can, miscalculating friction, losing grip—an operator in Manila steps in via VR or joystick to correct the fault. The operator triggers the motion, it becomes part of the robot’s dataset, and over time the company accumulates “embodied teleoperation data” to train its vision-language-action (VLA) models.

This model, in which autonomy handles 80-90% of the work while humans handle the hard remainder (and train the system for future autonomy), is becoming a template for many robotics companies. An office in the Philippines becomes a robotic control hub. The “physical worker” is now a remote “robot pilot.” As one robotics professor put it, “You’re like the substitute for the robot.” Remote hands, orbital labour, mobile offices for machines.

How the technology stacks today

At its heart, tele-operation is about bridging robot senses ↔ network ↔ human operator ↔ robot actuation, with feedback loops and shared control.

The robot has sensors (vision, depth, tactile, force).

It carries some autonomy (object detection, grasp planning, motion execution).

When unusual conditions arise (slip, misalignment, unpredictable object), the system hands off to the remote operator.

The operator sees real-time video, maybe haptic or force feedback, manipulates controls, then returns to monitoring.

All operator interventions are logged as data for machine learning.
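The handoff loop described above can be sketched as a small state machine. This is a minimal illustration, not Telexistence's actual design: the mode names, event names, and the `HandoffController` class are all assumptions introduced here.

```python
from enum import Enum, auto


class Mode(Enum):
    AUTONOMOUS = auto()
    AWAITING_OPERATOR = auto()
    TELEOPERATED = auto()
    SAFE_STOP = auto()


class Event(Enum):
    ANOMALY_DETECTED = auto()    # e.g. slip, misalignment, unpredictable object
    OPERATOR_CONNECTED = auto()
    OPERATOR_RELEASED = auto()
    LINK_LOST = auto()
    LINK_RESTORED = auto()


# Allowed transitions (hypothetical). Any pair not listed leaves the mode unchanged.
TRANSITIONS = {
    (Mode.AUTONOMOUS, Event.ANOMALY_DETECTED): Mode.AWAITING_OPERATOR,
    (Mode.AWAITING_OPERATOR, Event.OPERATOR_CONNECTED): Mode.TELEOPERATED,
    (Mode.TELEOPERATED, Event.OPERATOR_RELEASED): Mode.AUTONOMOUS,
    (Mode.AWAITING_OPERATOR, Event.LINK_LOST): Mode.SAFE_STOP,
    (Mode.TELEOPERATED, Event.LINK_LOST): Mode.SAFE_STOP,
    (Mode.SAFE_STOP, Event.LINK_RESTORED): Mode.AWAITING_OPERATOR,
}


class HandoffController:
    """Tracks who is in control and logs every event it sees."""

    def __init__(self):
        self.mode = Mode.AUTONOMOUS
        self.log = []  # (old_mode, event, new_mode) tuples, kept as training/audit data

    def handle(self, event):
        new_mode = TRANSITIONS.get((self.mode, event), self.mode)
        self.log.append((self.mode, event, new_mode))
        self.mode = new_mode
        return new_mode
```

One design choice worth noting: after a link loss the sketch never resumes teleoperation directly. It returns to waiting for an operator, so a human has to reconnect deliberately.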

Several engineering factors matter:

Latency: human control requires low latency (ideally <100 ms round-trip) so the operator can react smoothly.

Network reliability and jitter: packet loss or high jitter degrade precision and safety.

Shared control and fallback logic: the autonomy must know when to alert the operator and how to cede control gracefully or revert to safe-state if the network fails.

Operator workstation design: The UI (VR headset, joysticks, monitors) must reduce fatigue and cybersickness and preserve situational awareness.

Data-capture infrastructure: high-fidelity logs of motion, robot state, operator commands, network metrics—all feed training pipelines to shrink the “human fallback” window.
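To make the latency and jitter factors concrete, here is a rough sketch of how a link might be summarised from round-trip probes and checked against limits. The 100 ms round-trip figure comes from the text above; the jitter and packet-loss limits, and the names `LinkHealth`, `summarise_link`, and `teleop_allowed`, are placeholders chosen for illustration, not values from any standard.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass
class LinkHealth:
    mean_rtt_ms: float
    jitter_ms: float
    loss_ratio: float


def summarise_link(rtt_samples_ms: Sequence[Optional[float]]) -> LinkHealth:
    """Summarise round-trip probes; None marks a probe that never came back."""
    if not rtt_samples_ms:
        raise ValueError("need at least one probe")
    received = [s for s in rtt_samples_ms if s is not None]
    loss = 1 - len(received) / len(rtt_samples_ms)
    if not received:
        return LinkHealth(float("inf"), float("inf"), 1.0)
    # Jitter here is the mean absolute change between consecutive received RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return LinkHealth(mean(received), jitter, loss)


def teleop_allowed(health: LinkHealth,
                   max_rtt_ms: float = 100.0,     # from the text
                   max_jitter_ms: float = 20.0,   # assumed placeholder
                   max_loss: float = 0.02) -> bool:  # assumed placeholder
    """True only if every metric is within its limit."""
    return (health.mean_rtt_ms <= max_rtt_ms
            and health.jitter_ms <= max_jitter_ms
            and health.loss_ratio <= max_loss)
```

For example, `summarise_link([40.0, 50.0, None, 60.0, 50.0])` reports one lost probe in five, which would fail the assumed 2% loss limit even though the mean round-trip time is comfortable.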

Recent academic work shows that even with relatively modest hardware, teleoperation can produce data that enable sophisticated autonomous mobile manipulation. For example, the “Mobile ALOHA” project used whole-body teleoperation to collect motion data and boost autonomous success rates by up to 90%. The trick in retail or logistics is to replicate this scale in noisy, real-world commercial environments.

And yet this global choreography, whether telepilot hubs in London or New York or Manila control rooms for robots in Tokyo stores, is only possible because the underlying tech stack (5G, fibre networks, VR/joystick interfaces, cloud/edge compute) has matured. The economics are shifting too. The Philippines offers reasonably skilled engineers, time-zone alignment, and comparatively low cost.

But the robots remain merely capable, not omnipotent. Autonomy handles the routine; tele-operators handle the edges. Rare conditions still force human intervention. That means the system remains dependent on human skill, and the training loop remains live.

Safety standards—current and missing

When you place a robot into a retail environment—customer-facing, partially autonomous, remotely controlled—you are entering a space fraught with regulatory, mechanical, human-factors and network-integrity risk.

Existing standards help. For industrial robots working alongside humans, ISO 10218-1 (safety requirements for industrial robots) sets out protective measures, risk assessment, emergency stop, guarding, and so on. Similarly, ISO 13482 covers safety requirements for personal care robots, the closest existing fit for service robots in public-facing spaces. But neither explicitly deals with remote tele-operation over a network, where the operator may be thousands of kilometres away, the link may jitter, and the robot shares aisles with customers.

A report from the British Standards Institution (BSI) on the remote operation of vehicles outlines two particularly relevant domains: operator performance and training, and workstation HMI standards. While vehicle-centric, it provides a template for tele-operated robots. Still, few standards require an automatic fallback from human control to a safe state when network health falls below a threshold. Nor do they answer questions such as “how long can the link be interrupted before the robot must safely halt?” or “what operator fatigue limits ensure consistent performance for remote robot pilots?”

In short, the gaps include:

Explicit annexes that treat the remote operator as a safety function, with shift-length limits, recertification, and scenario-based evaluation.

Data-logging standards for teleoperation: capturing network metrics, video, control inputs, and robot state for audit and incident reconstruction.

Network-health resilience rules: thresholds, watchdog logic, fallback behaviour.

Cybersecurity requirements for networked robots with human links: identity, encryption, certificate revocation, and malicious actor mitigation.

Cross-jurisdiction liability frameworks: multiple parties (robot manufacturer, network provider, remote operator employer, local retailer) may share risk, but standards are fragmented.
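The question of how long the link can be interrupted before the robot must halt maps naturally onto a watchdog timer. The sketch below is one way to express it, assuming the operator station sends periodic heartbeats. The `LinkWatchdog` class is hypothetical, and the timeout is left to the hazard analysis; no standard cited here prescribes it.

```python
import time


class LinkWatchdog:
    """Signals a halt when no heartbeat has arrived within timeout_s seconds."""

    def __init__(self, timeout_s, clock=time.monotonic):
        # A monotonic clock avoids false trips when the wall clock is adjusted.
        self.timeout_s = timeout_s
        self._clock = clock
        self._last_heartbeat = clock()

    def heartbeat(self):
        """Call whenever a valid packet arrives from the operator station."""
        self._last_heartbeat = self._clock()

    def must_halt(self):
        """True once the silence has lasted longer than the timeout."""
        return self._clock() - self._last_heartbeat > self.timeout_s
```

In use, the robot's control loop would call `must_halt()` every cycle and drop to its safe state as soon as it returns `True`. The `clock` parameter exists so the behaviour can be tested without waiting in real time.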

In the convenience-store setup described earlier, remote operators in Manila monitor dozens of robots and take over when an intervention is required, roughly 4% of the time. The moment is ripe to require compliance with standards for workspace ergonomics, fatigue monitoring, and certification.

Consider a concrete case: a customer reaches for an item just as the robot is moving its arm; the robot hesitates; packets are lost; the operator cannot intervene in time. Who is accountable? The store, the robot vendor, the remote-operator firm, the network provider?

Standards and regulations must evolve from “robot safety in static industrial cells” to “robot plus remote human plus network plus public space” safety ecosystems.

Politics, labour and globalisation

The Manila control-centre story is more than an engineering curiosity—it is a political and economic bellwether.

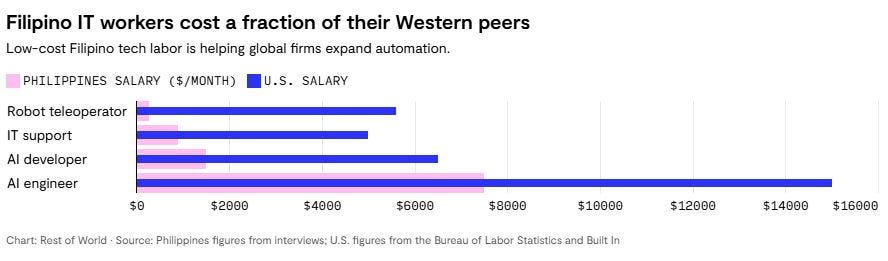

From one vantage point: The Philippines (already strongly positioned as a global outsourcing hub) is now offering higher-value remote work: not just call-centre or back-office tasks, but real-time, embodied teleoperation of robots. As an article in Rest of World recounts, about 60 young operators monitor and control robots for Japanese convenience stores, earning US$250-315 per month. They monitor ~50 robots each, stepping in only a few percent of the time. It’s a new kind of “robot watching,” a hybrid human-machine job.
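A back-of-envelope calculation shows what these workload figures imply. It rests on assumptions the reporting does not establish: that the roughly 4% intervention figure quoted earlier is a share of wall-clock time per robot, and that robots need help independently of one another. Both function names are made up for this sketch.

```python
def expected_concurrent_interventions(robots_per_operator: int,
                                      intervention_fraction: float) -> float:
    """Average number of robots needing help at any instant."""
    return robots_per_operator * intervention_fraction


def prob_two_or_more_waiting(robots_per_operator: int,
                             intervention_fraction: float) -> float:
    """Chance that at least two robots need help at once (binomial model)."""
    n, p = robots_per_operator, intervention_fraction
    p_none = (1 - p) ** n
    p_one = n * p * (1 - p) ** (n - 1)
    return 1 - p_none - p_one


load = expected_concurrent_interventions(50, 0.04)
clash = prob_two_or_more_waiting(50, 0.04)
print(f"average robots needing help at once: {load:.1f}")
print(f"chance of two or more at once: {clash:.2f}")
```

Under those assumptions a single operator would face about two robots needing help at any moment, which one person could not sustain. That suggests the reported percentage more likely counts a share of tasks or events than a share of time, though the source does not say which.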

From another vantage: This is “offshoring automation.” The traditional concern in developed countries has been that robots will replace workers. But here the robot stays in Japan, and the human labour is outsourced to Manila. One academic commented: “Automation was expected to reduce the number of jobs locally but raise the demand for skilled workers … but by off-shoring those jobs, you have a double whammy in an economic sense.”

The implications run deep.

Host countries (e.g., Japan) get the productivity gains and retain control of the physical assets, but local jobs (especially low-skilled ones) may shrink further—or shift hours, locations, and modalities.

Teleoperators in developing countries are doing more complex, real-time work—but under contract, at relatively low pay, without the protections of traditional employment or long-term career guarantees.

The data pipelines they feed may ultimately reduce the need for human oversight, raising the spectre of “automation in our time” from their vantage point.

Geopolitically, the concentration of remote-control hubs in low-cost countries raises questions about resilience (typhoons, power outages, network failures) and sovereignty (who monitors the monitors?).

Ethically, workers become remote monitors of machines doing “their jobs”—which may erode agency, job satisfaction, and skill development.

Local labour commentators in the Philippines highlight these tensions. Some see the new roles as an opportunity: a path into robotics, AI, motion-data science. Others warn of a treadmill: “We are building the tools that may replace us later.” On the store side, customers may never know that while a robot is shelving drinks in Tokyo, the “pilot” is in Manila. The new global division of physical labour becomes invisible—but consequential.

Regulators and policy makers must ask: What happens to workers when their job is “become the human in the loop for machines” and then the machine learns to do the loop itself? What duties do companies owe these remote pilots? Should they be treated as full-time employees, contractors, protected analysts? Should automation-data rights accrue to them? Should there be disclosure (e.g., “this robot is remotely supervised overseas” signage)?

What the future holds

Looking ahead, four major trajectories seem clear.

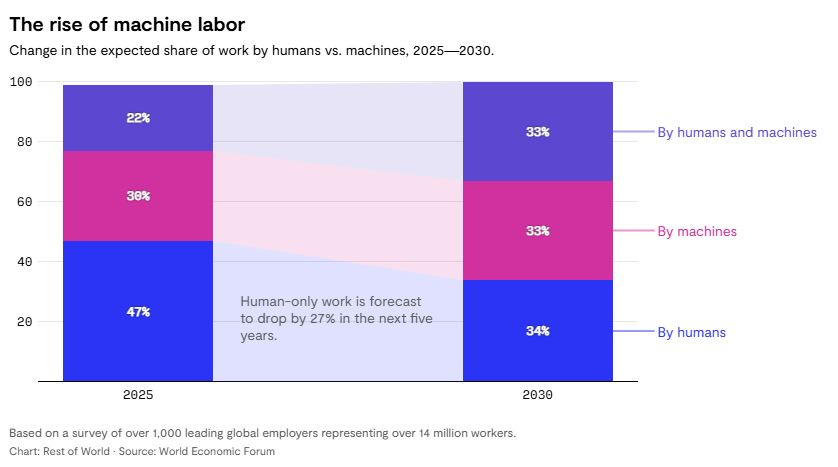

First: Autonomy will creep forward and tele-operator volumes will shrink, but not vanish. Robotics firms are collecting tele-operation data not only to correct errors in the moment, but to train foundation models of physical intelligence (grasping, motion, manipulation). Telexistence’s partner disclosures show that the aim is to shift manual tele-operation tasks into fully autonomous operations. But as many robotics practitioners caution, the “last 10%” of tasks (unpredictable objects, dynamic human environments, subtle physical forces) may require a human in the loop for a long time. Genuine full autonomy may remain elusive, or economically unjustified, in many retail settings.

Second: Standards will mature, but unevenly. In the next 2–5 years, we can expect dedicated annexes for tele-operation: operator certification, network reliability as a safety control, remote-pilot workstation ergonomics, and audit-logging rules. Industry groups (robotics, retail) may adopt them, but adoption may vary globally. Countries with strong labour-safety frameworks (Japan, Germany) may push ahead; in lower-cost regions, the urgency may lag.

Third: Honest reckoning of labour and governance. As remote tele-operation scales, public discussions will focus less on “robots vs humans” and more on “who works where, how are they paid, what rights do they have, how transparent is the chain of control?” In countries where tele-op jobs become the frontline of automation, ensuring career pathways, upskilling, contract protections, and a say in data flows will become pressing. On the store/customer side, notions of visibility and consent may emerge: should customers know that a worker halfway across the world is controlling a robot in the aisle?

Finally: Hybrid workforces, not robot-only ones. The world of 2030 likely features retail workers, robot units, remote pilots, AI trainers, and field maintenance staff all working in an orchestrated manner. Physical infrastructure and digital labour will meld. In that context, safety standards will need to treat the system (robot + network + human operator + environment), not just the single robot arm.

Towards a safety-governance checklist

If I were advising retailers, robotics firms, and regulators today, here is what I would recommend they build:

Treat the remote operator as a safety-critical function, with selection criteria, training hours, recertification, fatigue/time-on-task limits.

Include network health in the hazard analysis: define latency and jitter thresholds beyond which the system automatically shifts into a “safe state” (e.g., slow mode, pause operations).

Require event recorders, aka “black boxes,” for teleoperation, capturing video, robot state, operator inputs, and network health, and establish an incident-reconstruction standard.

Design operator workstations with ergonomics and HMI standards (display refresh, control latency, VR ergonomics, shift breaks) rather than treating tele-operators as cheap call-centre drones.

Enforce minimum cyber-security requirements for robot–network–operator links: encryption, identity management, credential revocation, and penetration testing.

Extend public-space robot safety standards (e.g., from ISO 13482) to tele-operated machines: signage, speed limits when under remote control, a clear visual indicator when the operator is engaged, and geofenced safe zones when the remote link is unstable.

Labelling/disclosure: where appropriate, inform customers or staff that a remote operator controls the robot, and list escalation procedures.

Workplace fairness for remote pilots: pathways to advancement, transparency about data-use, protections against deskilling, and clarity on how tele-op roles may evolve as autonomy improves.
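As an illustration of the “black box” item in this checklist, a teleoperation event recorder could be as simple as a bounded buffer that always holds the most recent records and can be dumped after an incident. The record fields and class names below are assumptions made for this sketch; a real incident-reconstruction standard would define its own schema.

```python
import json
from collections import deque
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class TeleopRecord:
    t_s: float                  # timestamp in seconds
    robot_mode: str             # e.g. "autonomous" or "teleoperated"
    operator_id: Optional[str]  # hypothetical identifier; None when no operator is engaged
    command: dict               # operator input or planner output
    rtt_ms: float               # network round-trip time when recorded
    video_frame_ref: str        # pointer to a stored frame, not the frame itself


class EventRecorder:
    """Keeps only the newest `capacity` records, like a flight recorder."""

    def __init__(self, capacity: int):
        self._buf = deque(maxlen=capacity)  # oldest entries fall off automatically

    def record(self, rec: TeleopRecord) -> None:
        self._buf.append(rec)

    def dump(self) -> str:
        """Serialise the buffer, oldest first, for incident reconstruction."""
        return json.dumps([asdict(r) for r in self._buf])
```

Storing a reference to each video frame rather than the frame itself keeps the buffer small. The trade-off is that the video store then needs its own retention rule covering at least the same window.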

Concluding thoughts

The image may surprise: a Filipino student in Manila steadily monitoring robots as they fill shelves in Japan. But it also captures a profound shift in the underpinnings of work: physical tasks are becoming digital, decoupled in location, network-mediated. Tele-operation is not a stopgap; it is the connective tissue of the next generation of embodied automation.

And yet, this isn’t just about machines. It’s about humans: the remote pilot who intervenes, the worker in the store who observes but never intervenes, the modeller who consumes the data, the customer who may never know who is behind the robot’s arm. To make this real and safe, we need more than better sensors and faster 5G—we need standards that recognise the human in the loop, networks as safety systems, and a globally fair labour architecture.

As robots proliferate across retail floors, warehouses, and logistics hubs, the “operator” may sit in Bangalore, Manila, or Warsaw. The union of human discretion and robot speed will be the engine. The question is whether the safety and labour ecosystems keep pace, or whether convenience comes at a hidden cost.

In that sense, what is happening in Tokyo’s convenience stores today is a small preview of a bigger transformation. The way we govern remote work, robotic work, and network-mediated physical labour will define not only how many cans get restocked, but how we define work, agency, safety, and equity in the world of 2030.

Robot News Of The Week

Grubhub, Wonder test robotic delivery in New Jersey

Grubhub and Avride are launching a robotic delivery pilot at Wonder’s Jersey City food hall — their first autonomous delivery program beyond college campuses. The test will serve about 20 restaurant concepts and leverage Jersey City’s high population density (19,000+ residents per square mile) as an ideal environment for last-mile delivery innovation.

GrayMatter Robotics opens physical AI innovation center

GrayMatter Robotics has opened a new 100,000-sq.-ft. headquarters and innovation center in Carson, California — billed as the nation’s most advanced interactive robotics facility for AI-powered manufacturing. Founded in 2020, the company specializes in “physical AI” systems that autonomously handle complex surface-finishing tasks like sanding and polishing without programming, adapting to part variations in real time.

The new site features over 25 active robotic cells where visitors can see robots scan, learn, and process new parts autonomously — showcasing how AI and robotics combine to enhance quality and productivity. CEO Ariyan Kabir said the move reflects GrayMatter’s commitment to revitalizing U.S. manufacturing and leveraging Carson’s strong industrial ecosystem.

GrayMatter’s technology, already deployed by firms such as Pierce Manufacturing, can operate up to four times faster than manual labor, deploy in months instead of years, and requires no coding expertise. The company partners with FANUC and 3M and has raised $85 million to date, including $45 million in a Series B led by Wellington Management.

The Carson expansion will create over 100 jobs and support local training in robotics and AI. Kabir said the company’s goal is not to replace workers but to “elevate them,” making automation accessible to manufacturers of all sizes through its factory-as-a-service model.

Carbon Robotics Secures $20M for Secret AI Farm Robot Revolution

Seattle-based Carbon Robotics has raised $20 million to develop a new AI-powered farming robot, expanding beyond its acclaimed LaserWeeder—a machine that uses lasers and computer vision to eliminate weeds without chemicals or manual labor.

Founded in 2018, the company has become a major agtech innovator, cutting weed-control costs by up to 80% and saving over 100,000 gallons of herbicides across 230,000 acres. Its LaserWeeder G2, launched in early 2025, uses 36 cameras and NVIDIA chips to destroy up to 600,000 weeds per hour, aligning with global pushes for sustainable, herbicide-free farming.

The new funding will support manufacturing expansion and the development of a next-generation robot—reportedly targeting broader agricultural tasks like planting or harvesting. With this round, Carbon Robotics strengthens its position as a leader in AI-driven, sustainable agriculture, addressing labor shortages while redefining how farms of the future operate.

Robot Research Of The Week

Moth-like drone navigates autonomously without AI

Researchers at the University of Cincinnati have developed a moth-inspired drone that uses flapping wings to hover and follow light sources — all without GPS, AI, or complex programming.

Led by Assistant Professor Sameh Eisa, the team discovered that insects’ agility can be replicated using a simple extremum-seeking feedback system, which constantly adjusts wing motion in real time for stability. The drone mimics the natural movements of moths, bumblebees, and hummingbirds, using flexible wings that create lift on both up and down strokes.

The lightweight “flapper drone,” powered by independent wing motion, demonstrates how biologically inspired control systems could enable highly efficient, autonomous micro-robots for surveillance or environmental monitoring — and reveal new insights into how insects achieve such precise flight with minimal brainpower.

Liquid Crystals Boost Artificial Muscles in Robots

Researchers at the University of Waterloo have developed a new material that could act as flexible “artificial muscles” for soft robots, replacing bulky motors and pumps. By blending liquid crystals (LCs) with liquid crystal elastomers (LCEs), the team created a rubber-like compound up to nine times stronger and capable of lifting 2,000 times its own weight when heated.

The material, detailed in Advanced Materials, delivers three times more power than human muscle, enabling robots to move more naturally and safely — ideal for medical microrobots or collaborative manufacturing systems. The breakthrough offers a lightweight, programmable alternative to traditional actuators and could soon be used as 3D-printing ink for next-generation soft robotic components.

Robot Workforce Story Of The Week

Greater Manchester Invests £3.5M in Salford’s Robotics and Engineering Hub

The Greater Manchester Combined Authority (GMCA) is investing £3.5 million in the Northern England Engineering and Robotics Innovation Centre (NERIC) at the University of Salford to strengthen SME innovation and regional growth. Over the next four years, the funding will help small and medium enterprises adopt advanced manufacturing, robotics, and intelligent sensing technologies to boost productivity across Greater Manchester.

NERIC, part of Salford’s Crescent Innovation Zone, also announced Professor Maziar Nezhad as its new director and unveiled plans for a £400,000 microfabrication lab opening in 2025. The center—recently rebranded from the North of England Robotics Innovation Centre—aims to bridge academia and industry, driving real-world applications of cutting-edge engineering and robotics research throughout the North West.

Robot Video Of The Week

Unitree Robotics has unveiled its newest full-sized humanoid, the H2, in a dramatic social media debut titled “H2 Destiny Awakening.” Standing 1.8 meters (5’11”) tall and weighing 70 kilograms, the robot moves with uncanny grace — performing smooth dance routines and kung fu sequences that blur the line between mechanical precision and human fluidity.

While the video is clearly meant to impress, there’s something undeniably creepy about watching a machine this lifelike twist, kick, and pose with perfect rhythm. It is a reminder that the age of eerily agile humanoids is no longer science fiction but stage-ready reality, ready or not.

Upcoming Robot Events

Oct. 27-29 ROSCon (Singapore)

Oct. 29-31 Intl. Symposium on Safety, Security, and Rescue Robotics (Galway, Ireland)

Nov. 3-5 Intl. Robot Safety Conference (Houston, TX)

Dec. 1-4 Intl. Conference on Space Robotics (Sendai, Japan)

Dec. 11-12 Humanoid Summit (Silicon Valley)

Jan. 5-6 UK Robot Manipulation Workshop (Edinburgh, Scotland)

Jan. 19-21 A3 Business Forum (Orlando, FL)

Mar. 16-19 Intl. Conference on Human-Robot Interaction (Edinburgh, Scotland)

Mar. 23-27 European Robotics Forum (Stavanger, Norway)

Mar. 29-Apr. 1 IEEE Haptics Symposium (Reno, NV)

May 27-28 Robotics Summit & Expo (Boston, MA)

June 1-5 IEEE ICRA (Vienna, Austria)

June 22-25 Automate (Chicago, IL)

Nice piece. Minsky mentioned the idea of regular remote working back in 1980: https://spectrum.ieee.org/telepresence-a-manifesto.

What's interesting to me is that this circumvents the established notion of working rights (visas) in countries, and that's a touchy subject in many parts of the world right now. How can we reconcile the notion of "we don't want immigrants taking our jobs" with "people not in the country taking our jobs"? Remote work is already commonplace with traditional overseas call centres, though they are not doing physical work in the target country. Is the remote worker scenario worse or better than "robots taking our jobs" which is where this will ultimately end up? For Japan which is historically a low-immigration country (though increasing lately due to demographically-induced labour shortages) maybe this is more acceptable than guest workers. Interesting times.

From a global human development perspective there are perhaps some opportunities for well paying jobs, but if it goes the way of data labelling in the global south we will just see exploitation.

Wow, the idea of the machine as a human extension really clicked! So insightful.