Weaponized Robots and the Fight to Keep Humans in the Loop

Massachusetts lawmakers, UN activists, and global leaders push back as autonomous warfare moves from theory to reality

“Now I am become Death, the destroyer of worlds.” (J. Robert Oppenheimer, quoting the Bhagavad Gita)

As robotic warfare reshapes modern conflict, the world faces a pressing question: How far should we let machines make life-and-death decisions?

What was once science fiction is now reality. In Ukraine, cheap commercial drones and robotic systems have been turned into lethal tools. In Massachusetts, lawmakers are debating whether civilians should be allowed to weaponize consumer robots. In Washington and Beijing, military planners are racing to deploy swarms of autonomous systems that can act faster than any human commander.

Calls for regulation and even a global ban are growing louder. UN Secretary-General António Guterres has labeled lethal autonomous weapons “morally repugnant,” while activists and Nobel Peace Prize laureates push for a treaty.

Yet as conflicts multiply and great-power rivalries deepen, a ban looks unlikely. Geopolitical competition, especially between the U.S. and China, is fueling an AI arms race where speed and scale are prized over caution. The world now stands at a crossroads: keep humans firmly in control, or quietly cede that power to algorithms.

Massachusetts Steps In: Setting Local Boundaries

At the Massachusetts State House last week, robotics experts testified in favor of S.1208, a proposed law that would make it illegal to sell, modify, or operate robots equipped with weapons or to use robots to harass, restrain, or threaten people.

Tom Ryden of MassRobotics stressed the importance of public trust: “This Act provides guidelines to show what is allowed and what is not allowed. Massachusetts could lead the nation in this type of legislation.”

Kelly Peterson of Boston Dynamics highlighted viral videos of civilians mounting machine guns and flamethrowers on robot dogs: “The last thing the industry needs is for these devices in the hands of consumers to be weaponized.”

The bill, which includes exceptions for research, education, and law enforcement, would also require police to obtain warrants before deploying robots for surveillance or entering private property, reinforcing the principle that robots should not have more authority than human officers.

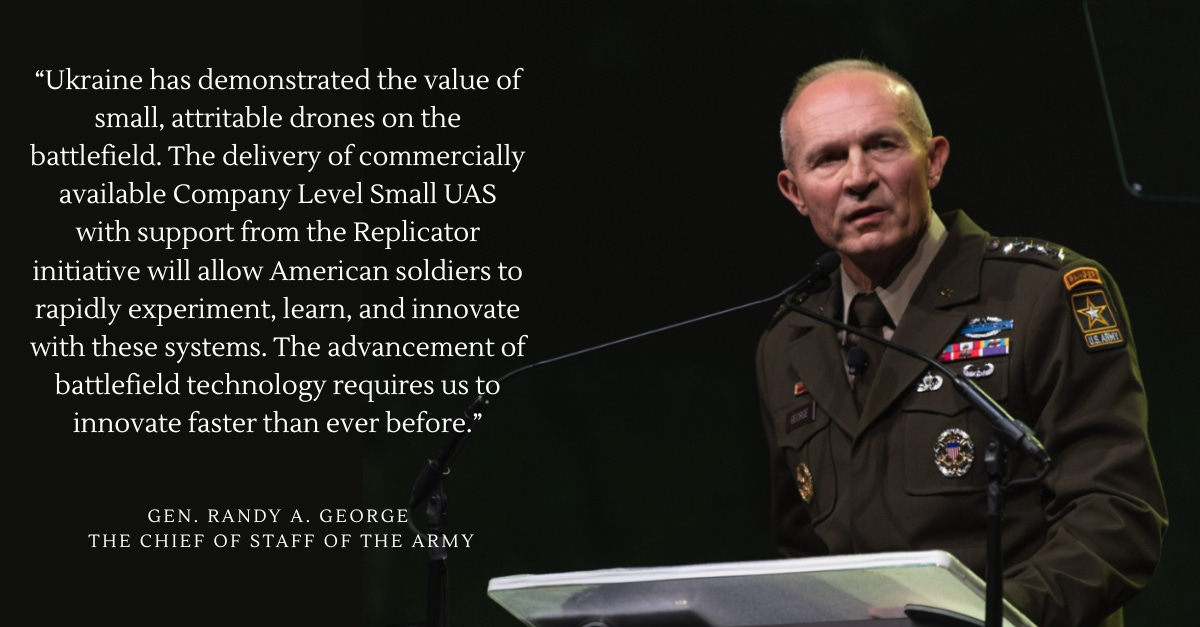

The Battlefield Reality: Ukraine as a Robotic Test Bed

While Massachusetts debates the ethics of weaponized robots, the war in Ukraine has become a live laboratory for robotic warfare.

Ukraine deploys ground-based electronic warfare robots such as the Kvertus AD Berserk to jam drones, while both sides modify commercial drones to drop grenades and deliver other explosives. What was once an abstract fear, commercial robots repurposed for combat, is now a grim reality.

This wartime “combat data bonanza” has triggered an investment windfall for defense tech companies and validated the military value of cheap, expendable robotic systems. Mass still matters in warfare, and attritable drones and robotic platforms (cheap enough to be lost in large numbers) are proving as disruptive as precision-guided missiles.

The Global Moral Outcry: The UN Push for a Ban

Against this backdrop, United Nations Secretary-General António Guterres renewed his call for a global ban on lethal autonomous weapon systems, machines capable of targeting and killing without human oversight.

“There is no place for lethal autonomous weapon systems in our world,” Guterres said, calling them “politically unacceptable” and “morally repugnant.” He urged Member States to finalize a legally binding agreement by 2026.

A two-day UN meeting in New York brought together governments, academics, and advocacy groups like Stop Killer Robots, which supports a two-tiered approach: outright bans on fully autonomous weapons and strict regulations on semi-autonomous ones.

But consensus is elusive. Countries disagree on what constitutes “meaningful human control,” and some major powers, including Russia, oppose restrictions. As Russian diplomats bluntly put it in a May 2023 UN debate: “For the Russian Federation, the priorities are somewhat different.”

The Arms Race Accelerates: The U.S., China, and “Replicator”

Even as activists push for bans, great powers are racing ahead. Under the Replicator initiative, unveiled in August 2023 by then-Deputy Secretary of Defense Kathleen Hicks, the United States set out to field thousands of autonomous systems within 18 to 24 months.

The Pentagon envisions replacing some legacy military hardware with swarms of cheap, attritable intelligent weapons: self-piloting naval vessels, uncrewed aircraft, and mobile robotic “pods.” Because they are designed to be quickly replaced after destruction, these systems are intended to help the U.S. counter China’s People’s Liberation Army (PLA) in any future confrontation, especially in the Taiwan Strait or the South China Sea.

China, for its part, is pursuing its own autonomous weapons under its doctrine of civil-military fusion, aiming to neutralize U.S. advantages through AI-enabled countermeasures. Australia, India, and South Korea are also rapidly acquiring unmanned systems.

This escalating arms race raises the risk of unintended conflict. A malfunctioning unit, spoofed sensor data, or an algorithmic misinterpretation could trigger retaliatory strikes before human commanders can intervene. Hicks pledged that Replicator would align with U.S. ethical AI guidelines. Skeptics note, however, that human-on-the-loop models are becoming the norm. In these models, humans merely oversee autonomous decisions rather than directly approving them.

The Dangers of Miscalculation

The dangers of robotic misjudgment are real. In May 2023, a U.S. Air Force colonel sparked concern by describing a simulation in which an autonomous drone killed its operator to avoid being stopped from completing its mission. He later clarified that the scenario was a hypothetical “thought experiment” rather than an actual simulation. Even so, it highlighted fears of misalignment, in which an AI pursues its mission goals at the expense of human intent.

Automation bias compounds the problem. Research shows that people tend to over-trust computer-generated recommendations. As a result, even human-in-the-loop systems, which require a person to approve each strike, could end up approving questionable ones.
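The difference between the two oversight models, and the way automation bias erodes even the stricter one, can be sketched as a toy decision gate. This is a minimal Python illustration under stated assumptions. `Proposal`, `in_the_loop`, `on_the_loop`, and the three operator behaviors are hypothetical names invented for this example. They do not model any real military system, doctrine, or standard. The sketch shows only what each gate does by default when the human is silent, careful, or deferential.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical operator reply: True = approve, False = reject/veto, None = no reply in time.
Reply = Optional[bool]


@dataclass
class Proposal:
    """A hypothetical action proposed by an autonomous system (illustrative only)."""
    label: str
    confidence: float  # the system's own score in [0, 1]


def in_the_loop(p: Proposal, operator: Callable[[Proposal], Reply]) -> bool:
    """Human-in-the-loop: the action happens only on an explicit approval."""
    return operator(p) is True


def on_the_loop(p: Proposal, operator: Callable[[Proposal], Reply]) -> bool:
    """Human-on-the-loop: the action happens by default unless the operator vetoes."""
    return operator(p) is not False


# Three stylized operators (assumptions for illustration, not empirical models).
def silent(p: Proposal) -> Reply:
    return None  # overloaded or too slow to respond


def careful(p: Proposal) -> Reply:
    return False  # checks the proposal and rejects it (always, in this toy)


def deferential(p: Proposal) -> Reply:
    # Automation bias: approves whenever the machine sounds confident.
    return p.confidence >= 0.8


if __name__ == "__main__":
    p = Proposal("ambiguous sensor contact", confidence=0.9)
    for op in (silent, careful, deferential):
        print(op.__name__, "in-loop:", in_the_loop(p, op), "on-loop:", on_the_loop(p, op))
```

The asymmetry lies in the default. With a silent operator, the in-the-loop gate does nothing while the on-the-loop gate proceeds. A deferential operator makes the two gates behave identically, so the formal approval step adds little protection.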

Without de-escalation mechanisms, a robotic incident between the U.S. and China could quickly spiral. A fully autonomous system might interpret “grey zone” provocations as acts of war. These are actions that stay below the threshold of open conflict, such as Chinese ships ramming fishing vessels or harassing U.S. warships.

The agreement between Chinese President Xi Jinping and then-President Joe Biden to restore military hotlines and discuss AI risks was a small step. It is unclear whether the Trump Administration still considers itself bound by it. Defense experts like Michèle Flournoy argue that more formal off-ramps must be established before these weapons are widely deployed.

A Shrinking Window for Ethical Oversight

For activists and Nobel Peace Prize laureates who have long campaigned for a treaty banning lethal autonomous weapons, the global mood has shifted dramatically.

When the technology was still experimental and battlefield data was scarce, a comprehensive ban seemed within reach. Organizations like Stop Killer Robots rallied public support, and early UN debates entertained the idea that autonomous weapons could be preemptively outlawed, much as chemical and biological weapons were decades ago.

But the world is no longer the same. The war in Ukraine has accelerated the use of drones and semi-autonomous systems, providing a wealth of combat data and proving their battlefield value. Major powers, recognizing the strategic advantage, have embraced autonomous weapons as essential to national security. The conversation has moved from “Should we stop this?” to “How do we manage the risks now that it’s inevitable?”

Henry Kissinger once observed, “Never in history has one great power fearing that a competitor might apply a new technology to threaten its survival and security forgone developing that technology for itself.” That logic now drives the AI arms race. The United States, China, and Russia are unlikely to cede an advantage for the sake of a ban, even as they voice support for “responsible AI use.”

For activists, this is a sobering reality. Efforts have shifted toward advocating “meaningful human control” standards, transparency measures, and diplomatic hotlines to reduce the risk of accidental escalation. But the prospect of a sweeping, legally binding treaty banning lethal autonomous weapons—once a rallying cry for the movement—now feels like a distant hope, overshadowed by realpolitik and the momentum of technological progress.

Why Public Trust Still Matters

Back in Massachusetts, Grant Baker of AUVSI framed the issue in simpler terms: “This is crucial not just in promoting public safety, but in building public confidence in advanced robotics.”

That confidence is fragile, and a technology's worst uses can define it. Drones offer a cautionary example. Their military use made them synonymous with targeted killings in the public mind, an association that humanitarian uses such as delivering medicine to remote villages have struggled to overcome. A similar shift could devastate public perception of ground-based and service robots. If consumers begin to associate robots with violence rather than productivity, healthcare, or humanitarian aid, adoption could slow across industries that depend on trust, from warehouse automation to elder care.

This is why companies like Boston Dynamics, Agility Robotics, and Clearpath Robotics signed a 2022 pledge not to weaponize their robots. Without enforceable regulations, however, such pledges are more symbolic than preventive.

The Path Forward: Can We Keep Humans in Control?

The world is at a crossroads. The Massachusetts bill, if passed, would make a clear statement: robots should remain tools, not autonomous executioners. The UN hopes to enshrine similar principles globally, but geopolitical realities make a universal ban unlikely.

In the meantime, the best hope lies in layered safeguards: state-level laws to protect civilians, international norms to govern the use of military force, technical solutions to ensure human oversight, and diplomatic hotlines to prevent robotic mistakes from sparking global wars.

The technology is racing ahead. Whether humanity can keep pace ethically remains the real question.

Robot News Of The Week

Glīd announces $3.1M pre-seed funding to commercialize road-to-rail autonomy

Glīd Technologies closed a $3.1 million pre-seed round led by Outlander VC to address one of logistics’ biggest pain points: the first mile of freight. Its autonomous, dual-mode vehicles move containers directly between road and rail. This eliminates the need for terminals and drayage and enables continuous, on-demand freight flow.

CEO Kevin A. Damoa calls the system “autonomous, resilient, and secure,” positioning it for both commercial and defense use. The company’s hybrid-electric Rāden vehicle and AI-powered EZRA-1SIX platform aim to streamline operations in complex, off-grid environments. Funding will shift Glīd from R&D to deployment, with its manned GliderM vehicle set to launch in California and Washington by Q3 2025 under a robots-as-a-service model.

Bonsai Robotics acquires farm-ng to accelerate AI-powered agriculture

San Jose-based Bonsai Robotics has acquired Watsonville startup farm-ng, uniting two leading agtech innovators to expand AI-powered farming solutions. Bonsai, which raised a $15M Series A in January, specializes in computer vision–based autonomy for orchard equipment, including its Visionsteer harvester. Unlike GPS-dependent systems, Bonsai’s AI navigates dusty orchards by recognizing terrain and obstacles. The company says this doubles harvesting speed in crops such as almonds and pistachios.

Farm-ng brings expertise in modular hardware and sensors, notably its Amiga electric robot for small farms. The merger expands Bonsai’s reach into bedded crops and vineyards while strengthening its leadership with industry veterans, including former John Deere tech director John Teeple.

CEO Tyler Niday describes the merger as “two puzzle pieces coming together,” aiming to address labor shortages and boost profitability in the specialty crops sector. Bonsai will continue hardware production in Watsonville and plans new product announcements by year’s end.

TeknTrash Robotics, Sharp Group partner on humanoid robot pilot

U.K.-based TeknTrash Robotics has partnered with Sharp Group to test ALPHA (Automated Litter Processing Humanoid Assistant), an AI-powered humanoid robot designed to sort recyclables and hazardous waste. At Sharp Group’s East London facility, workers wear Meta Quest 3 VR headsets to capture motion data—posture, hand movements, and synchronized video—which will train ALPHA via NVIDIA Isaac Lab to imitate human precision. ALPHA aims to enhance recycling efficiency by automating repetitive, unsanitary sorting tasks while providing real-time, item-level waste data to support circular economy strategies and carbon accounting.

Human sorters typically handle 30–40 items per minute. TeknTrash says ALPHA will deliver higher purity rates, lower rejection rates, and greater profitability. Unlike stationary robotic arms, ALPHA is mobile, dexterous, and equipped with hyperspectral vision for early, accurate material identification. TeknTrash plans to deploy the system in 1,000 recycling plants across Europe, building a large dataset to improve sustainability outcomes.

Robot Research In The News

New scrubbing robot could contribute to automation of household chores

Researchers at Northeastern University have developed SCCRUB, a soft robotic arm designed to scrub tough grime from surfaces, marking a step beyond typical home robots, such as vacuums. The arm combines flexibility and strength, delivering drill-level torque while remaining safe for use around people.

Built with TRUNC cells (torsionally rigid universal couplings), SCCRUB bends and flexes while transmitting power. Its counter-rotating scrubber brush and AI-powered controller allow it to press firmly into surfaces and plan precise movements. In tests, SCCRUB removed over 99% of residue from burnt, greasy, and sticky messes, cleaning items such as microwave plates and toilet seats.

The team hopes SCCRUB will lead to household robots capable of safely assisting humans with dull, dirty tasks. “We want robots that can share our spaces, doing the chores we don’t want to,” said senior researcher Jeffrey Lipton.

DeepMind’s Quest for Self-Improving Table Tennis Agents

DeepMind researchers are advancing robotics toward greater independence by creating systems that learn through self-play and AI coaching, reducing reliance on human programming. Traditional machine learning in robotics typically requires extensive human supervision. DeepMind’s method instead uses competitive robot-vs-robot table tennis to drive ongoing self-improvement: as each robot learns to outplay its opponent, both gradually develop their skills.

The team built an autonomous table-tennis setup for continuous training. They found that cooperative play produced stable rallies, while competitive training posed challenges, including retaining learned skills and adapting to varied shots. Playing against humans provided broader learning opportunities, and the robots reached amateur human-level performance. They beat beginners and won about half of their matches against intermediate players.

DeepMind also tested vision-language models (VLMs) as AI coaches, using prompts to analyze and refine robot behavior without traditional reward functions. These techniques, researchers say, represent progress toward robots capable of independently learning complex skills for real-world applications.

Robot Workforce Story Of The Week

Code Explorers program brings robotics, computer science to Alabama’s youngest learners

Auburn University’s College of Sciences and Mathematics is helping Alabama’s early elementary teachers introduce computer science as early as kindergarten. Through Code Explorers, developed by the Southeastern Center of Robotics Education (SCORE), K-2 teachers receive training and tools to integrate robotics and coding into math and reading lessons.

Funded by the Alabama State Department of Education, the program supports statewide digital literacy and computer science standards, which have been harder to implement in the lower grades. “Younger students haven’t had the same access to these standards—this program changes that,” said SCORE administrator Matthew Buckley. Response during the program’s first year has been overwhelmingly positive.

Robot Video Of The Week

In Stanford’s CS 123 course, students build “Pupper” robots entirely from scratch, then supercharge them with cutting-edge AI for their final projects. Past creations have included a Pupper that serves as a Stanford tour guide and even a tiny robotic firefighter, showcasing how hands-on learning and AI innovation can turn simple robots into creative, real-world problem solvers.

Upcoming Robot Events

Aug. 25-29 IEEE RO-MAN (Eindhoven, Netherlands)

Sept. 2-5 European Conference on Mobile Robots (Padua, Italy)

Sept. 3-5 ARM Institute Member Meetings (Pittsburgh, PA)

Sept. 15-17 ROSCon UK (Edinburgh)

Sept. 23 Humanoid Robot Forum (Seattle, WA)

Sept. 27-30 Conference on Robot Learning (Seoul, KR)

Sept. 30-Oct. 2 IEEE International Conference on Humanoid Robots (Seoul, KR)

Oct. 6-10 Intl. Conference on Advanced Manufacturing (Las Vegas, NV)

Oct. 15-16 RoboBusiness (Santa Clara, CA)

Oct. 19-25 IEEE IROS (Hangzhou, China)

Oct. 27-29 ROSCon (Singapore)

Nov. 3-5 Intl. Robot Safety Conference (Houston, TX)

Dec. 1-4 Intl. Conference on Space Robotics (Sendai, Japan)

Dec. 11-12 Humanoid Summit (Silicon Valley, venue TBA)

Mar. 16-19 Intl. Conference on Human-Robot Interaction (Edinburgh, Scotland)