Why Robots Need Human Acceptance Testing (HAT) to Succeed

Without real-world testing for human interaction, robots risk public rejection—jeopardizing the future of automation in homes, streets, and public spaces.

Aaron’s Thoughts On The Week

“Trust is built with consistency.” — Lincoln Chafee

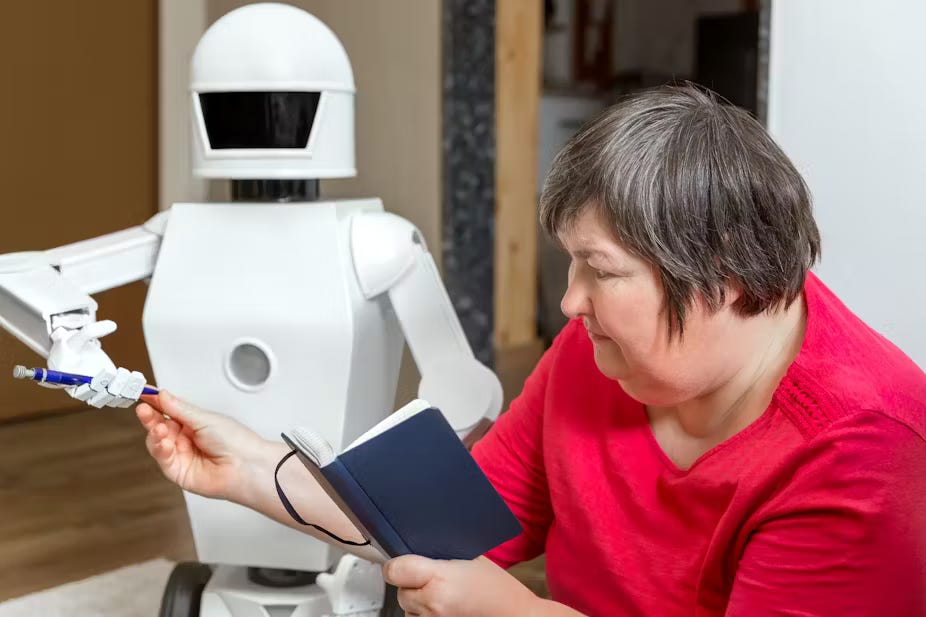

As robots move beyond the structured environments of factories and warehouses into our homes, streets, and public spaces, ensuring they operate safely, intuitively, and reliably around people is more critical than ever. In industrial settings, Factory Acceptance Testing (FAT) is a well-established step that verifies whether a robot meets performance and safety standards before being deployed. However, robots designed for human-facing roles—whether assisting in homes, delivering packages on sidewalks, or working in retail environments—require a different kind of validation. Their success depends not only on their ability to function mechanically but also on how well they interact with people, understand social norms, and gain human trust.

We now need a Human Acceptance Testing (HAT) framework to bridge this gap. Unlike FAT, which focuses on technical specifications, HAT would measure how robots adapt to real-world, unstructured environments, where they must navigate dynamic spaces, avoid obstacles, and interpret human behavior. A delivery robot on a sidewalk, for example, must recognize when to yield to a pedestrian, while a home assistant robot should be able to adjust to the unique preferences of its user. Without proper testing in these settings, a robot that works flawlessly in a lab may fail spectacularly in a real-world situation.

Challenges and Current State

The challenge of implementing HAT is significant. Human environments are unpredictable, with constantly shifting conditions, varying levels of user familiarity with technology, and potential privacy concerns. Robots in public must also comply with social expectations—something traditional engineering tests do not assess. A robot that moves too aggressively, stands too close, or behaves unnaturally could create discomfort or even fear, reducing adoption rates. Research already highlights these concerns, with studies on social robots emphasizing the need for tailored security and behavior standards as these machines integrate more deeply into everyday life.

Efforts to evaluate robots in human spaces are emerging. The RoboCup@Home competition, for instance, puts service robots through real-world tests designed to simulate household environments. Similarly, pilot programs in airports, hospitals, and retail settings provide valuable insights, but these remain ad hoc and limited in scale. Unlike industrial machines, which must pass standardized FAT procedures before deployment, consumer and public-facing robots lack a formalized process to test real-world readiness. Without structured validation frameworks, developers risk deploying robots that may function technically but fail to integrate seamlessly into human-centric spaces. Addressing this gap is crucial for broader adoption and trust in robotic systems.

Human Acceptance Testing (HAT) vs. User Acceptance Testing (UAT): Key Differences

At first glance, Human Acceptance Testing (HAT) and User Acceptance Testing (UAT) may seem similar, as both involve testing a product before deployment to ensure it meets user needs. However, they serve distinct purposes, particularly when applied to robots operating in public and home environments.

User Acceptance Testing (UAT): Ensuring Functional Requirements

UAT is a well-established practice in software development and traditional hardware deployment. End users typically conduct it to verify that a product functions as intended and meets specified business or operational requirements before final release. UAT primarily focuses on:

Ensuring the system works as expected based on predefined use cases.

Identifying bugs, usability issues, or workflow inefficiencies.

Verifying that the product aligns with customer needs and business goals.

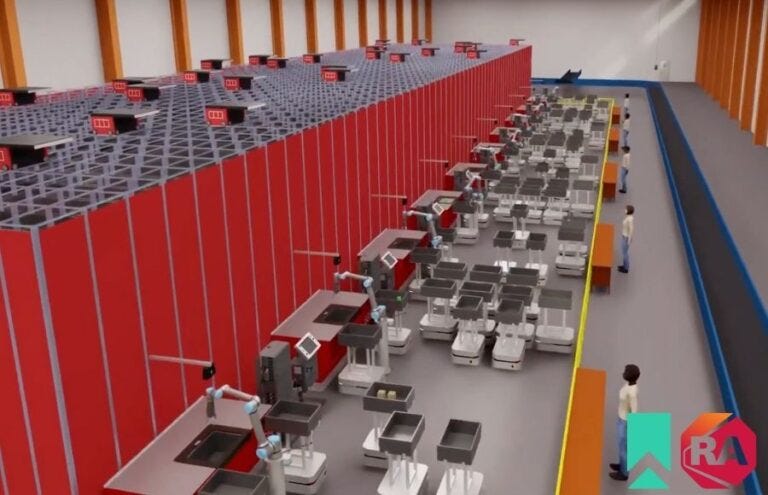

For example, in a robotic warehouse system, UAT might ensure that a robot correctly picks and places items according to company processes and integrates well with inventory management software.

Human Acceptance Testing (HAT): Ensuring Social and Environmental Readiness

HAT, on the other hand, is a newer concept tailored for robots interacting in human-centered environments. While UAT focuses on whether a system meets business needs, HAT assesses how well robots integrate into real-world human spaces. It considers:

Social Interaction: Does the robot behave in ways that make people feel safe and comfortable?

Environmental Adaptability: Can the robot function in unpredictable settings like homes, streets, or crowded public spaces?

Trust and Usability: Do humans intuitively understand how to interact with the robot?

Compliance with Social Norms: Does the robot respect personal space, pedestrian right-of-way, or household etiquette?

For example, a delivery robot undergoing UAT might be tested to ensure it follows navigation commands correctly. Under HAT, however, it would be tested to see how pedestrians react to it on a crowded sidewalk and whether it can adjust its behavior based on human proximity.
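To make the proximity idea more concrete, here is a minimal sketch of one way a HAT trial might score a delivery robot's behavior around pedestrians. It is not an established HAT metric. The 1.2 m radius borrows the outer edge of Edward T. Hall's "personal distance" zone, while the 0.5 m/s speed cap, the 5% tolerance, and the names `Observation`, `intrusion_rate`, and `passes_proximity_check` are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass

# Assumption: 1.2 m approximates the outer edge of Hall's "personal distance" zone.
PERSONAL_SPACE_M = 1.2


@dataclass
class Observation:
    """One logged timestep from a hypothetical sidewalk trial."""
    robot_xy: tuple[float, float]
    pedestrian_xy: tuple[float, float]
    robot_speed_mps: float


def intrusion_rate(log: list[Observation], max_speed_near_mps: float = 0.5) -> float:
    """Fraction of timesteps where the robot is inside a pedestrian's personal
    space while moving faster than the (hypothetical) allowed speed."""
    if not log:
        return 0.0
    violations = 0
    for obs in log:
        distance = math.dist(obs.robot_xy, obs.pedestrian_xy)
        if distance < PERSONAL_SPACE_M and obs.robot_speed_mps > max_speed_near_mps:
            violations += 1
    return violations / len(log)


def passes_proximity_check(log: list[Observation], tolerance: float = 0.05) -> bool:
    """Pass if intrusions occur in at most `tolerance` of timesteps (assumed cutoff)."""
    return intrusion_rate(log) <= tolerance


if __name__ == "__main__":
    trial = [
        Observation((0.0, 0.0), (3.0, 0.0), 1.2),  # far away, full speed: fine
        Observation((0.0, 0.0), (1.0, 0.0), 1.0),  # close and fast: intrusion
        Observation((0.0, 0.0), (0.8, 0.0), 0.3),  # close but slowed down: fine
        Observation((0.0, 0.0), (0.5, 0.0), 0.0),  # stopped to yield: fine
    ]
    print(intrusion_rate(trial), passes_proximity_check(trial))
```

The design point is that HAT cares about behavior in context: being close to a person is acceptable if the robot has slowed or stopped, which is exactly what a navigation-only UAT check would not measure.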

Where Do We Start?

To make Human Acceptance Testing (HAT) as common as Factory Acceptance Testing (FAT) and User Acceptance Testing (UAT), several key steps must be taken across industry, academia, and standards organizations. Some of these steps are already being implemented in certain regions and by a few key organizations.

Industry Adoption: Incentivizing Companies to Implement HAT

Robot firms need clear incentives to prioritize HAT. These could come in the form of:

Regulatory requirements: Governments could mandate HAT for public-facing robots, similar to safety regulations for autonomous vehicles.

Market-driven pressures: Consumer and business buyers may demand HAT as a competitive differentiator for usability and safety.

Insurance & liability considerations: Insurers could require HAT to mitigate risks for companies deploying public-facing robots.

Standards Organizations: Writing Formal HAT Guidelines

For HAT to become mainstream, standards organizations such as ISO, IEEE, and ASTM International need to develop formal guidelines for testing human-robot interaction in public and home environments. Possible steps include:

Creating a technical standard that defines HAT protocols, similar to ISO 10218 for industrial robots.

Expanding existing safety standards (like ISO 13482 for personal care robots) to include human acceptance metrics.

Partnering with industry and academia to run real-world pilot programs validating HAT frameworks.

Academic Research: Building the Scientific Basis for HAT

More research is needed to define HAT best practices. Universities and robotics labs could:

Conduct large-scale user studies to analyze human reactions to robots in public spaces.

Develop benchmark tests for evaluating human trust, safety, and adaptability.

Work with industry partners to pilot real-world HAT trials across different robotic applications.
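As an illustration of what a simple benchmark from such user studies might look like, the sketch below averages participant ratings for each of the four HAT criteria listed earlier (social interaction, environmental adaptability, trust and usability, social-norm compliance) and flags any criterion that falls short. The 1-to-5 Likert scale, the 3.5 pass threshold, and the `summarize` function are hypothetical assumptions, not an existing standard or protocol.

```python
from statistics import mean

# The four criteria mirror the HAT list in this article; the 1-5 Likert scale
# and the default pass threshold below are hypothetical choices for this sketch.
CRITERIA = (
    "social_interaction",
    "environmental_adaptability",
    "trust_and_usability",
    "social_norm_compliance",
)


def summarize(responses: list[dict[str, int]], threshold: float = 3.5) -> dict[str, tuple[float, bool]]:
    """Return {criterion: (mean rating, passed?)} across all participants."""
    summary: dict[str, tuple[float, bool]] = {}
    for criterion in CRITERIA:
        scores = [r[criterion] for r in responses if criterion in r]
        if not scores:
            raise ValueError(f"no responses for {criterion}")
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"ratings for {criterion} must be on a 1-5 scale")
        avg = mean(scores)
        summary[criterion] = (avg, avg >= threshold)
    return summary


if __name__ == "__main__":
    study = [
        {"social_interaction": 4, "environmental_adaptability": 3,
         "trust_and_usability": 5, "social_norm_compliance": 2},
        {"social_interaction": 5, "environmental_adaptability": 4,
         "trust_and_usability": 4, "social_norm_compliance": 3},
    ]
    for criterion, (avg, passed) in summarize(study).items():
        print(f"{criterion}: {avg:.2f} {'pass' if passed else 'FAIL'}")
```

Reporting each criterion separately, rather than one blended score, keeps a robot that is functionally strong but socially awkward from hiding its weak spot behind a good average.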

Starting Points for Implementation

My roadmap is as follows. We are already seeing progress on some of these suggestions, and that early promise is why I am recommending them.

1️⃣ Pilot programs: Companies can start small-scale HAT trials for delivery robots, home assistants, and autonomous service bots.

2️⃣ Industry-academia partnerships: Collaborative research between robotics firms and universities can produce standardized HAT evaluation frameworks.

3️⃣ Policy discussions: Engaging with regulators and safety organizations can lay the groundwork for future HAT regulations.

The road to making HAT a universal standard requires cross-sector collaboration, but as robots become more integrated into daily life, this shift is inevitable. The key is starting now—before public resistance slows adoption.

Examples of Real-World Robot Failures That Highlight the Need for HAT

As robots increasingly integrate into public spaces and homes, several real-world incidents underscore the critical need for Human Acceptance Testing (HAT) to ensure safety, reliability, and public trust.

Security Robots in Public Spaces

In July 2016, a Knightscope K5 security robot collided with a 16-month-old toddler at the Stanford Shopping Center in Palo Alto, California, causing minor injuries. The shopping center temporarily suspended the robot's operations following the incident. Additionally, in 2017, a K5 robot at Washington Harbour in Washington, D.C., malfunctioned and fell into a water fountain, raising concerns about the reliability of autonomous security devices. This incident was highlighted in an earlier Six Degrees of Robotics column.

HitchBOT's Demise

HitchBOT, a Canadian hitchhiking robot designed to study human-robot interactions, successfully traversed Canada, Germany, and the Netherlands. However, in 2015, during its U.S. journey, HitchBOT was vandalized and destroyed in Philadelphia, highlighting challenges in protecting robots in uncontrolled public environments.

Home Robot Vulnerabilities

In October 2024, reports emerged of Ecovacs Deebot X2 robot vacuums being hacked to emit racial slurs and chase pets, alarming homeowners and exposing significant security flaws in consumer robotics. Separately, a viral video showed a robot vacuum smearing pet feces across a home, illustrating the limitations of current obstacle recognition systems in domestic robots.

Delivery Robot Incidents

In September 2023, a Starship Technologies delivery robot at Arizona State University injured a staff member when it reversed into them, raising questions about the safety protocols of autonomous delivery systems.

These incidents emphasize the necessity for comprehensive Human Acceptance Testing (HAT) to evaluate not only the technical performance of robots but also their interactions with humans and adaptability to real-world environments. Implementing HAT can help prevent such failures, ensuring that robotic technologies are both safe and accepted by the public.

Conclusion: The Urgent Need for Human Acceptance Testing (HAT)

As robots become part of everyday life—navigating our streets, assisting in our homes, and integrating into public spaces—their success hinges not just on their technical capabilities, but on how well they are accepted by people. While Factory Acceptance Testing (FAT) ensures a robot functions properly and User Acceptance Testing (UAT) verifies it meets business needs, neither evaluates how a robot interacts with real people in unpredictable environments. That is where Human Acceptance Testing (HAT) must step in.

Without a structured HAT framework, we risk deploying robots that fail in the real world—not because they don’t work mechanically, but because they don’t work for people. Incidents like security robots knocking over pedestrians, delivery bots blocking sidewalks, and home robots getting hacked highlight the growing disconnect between technology and human expectations. These failures do more than cause temporary disruptions; they erode public trust and fuel resistance to robotics adoption.

If companies and policymakers fail to prioritize HAT now, public skepticism will only grow, making future deployments even more challenging. Resistance to automation in retail, food service, healthcare, and public transit has already begun, often fueled by bad experiences with early-stage robots that weren’t fully tested for human interaction. Without trust, robotics adoption will stall, regulations will tighten, and the industry will face steeper challenges in proving its value.

To prevent this, robotics firms must see HAT as essential, not optional. Standards organizations must formalize guidelines, and academic researchers must provide the data to shape best practices. If we act now, we can ensure robots enter our world in a way that fosters trust and enhances human life—rather than becoming a source of frustration, fear, or backlash.

Robot News Of The Week

RightHand Robotics receives strategic investment from Rockwell Automation

RightHand Robotics, a leader in robotic piece-picking, has secured a strategic investment from Rockwell Automation. This backing provides financial stability and industry credibility to scale its RightPick 4 technology, which uses AI, computer vision, and robotic grippers for automated item picking. CEO Yaro Tenzer aims to expand operations in Europe and North America. Rockwell’s integration expertise and global network will accelerate adoption and profitability. RightHand Robotics has raised over $120M since its founding and continues pushing warehouse automation's boundaries. Tenzer will discuss “lights-out” fulfillment at the Robotics Summit & Expo in Boston this April.

Alibaba-backed robotics firm completes $69m series A

LimX Dynamics, a leader in humanoid and robotic technology, has raised 500 million yuan (US$69.02 million) in its Series A+ funding over the past six months. The round saw support from industrial players, financial institutions, and existing investors, including Alibaba Group. In December 2024, the company showcased a full-size humanoid robot with a 360° rotating hip joint for enhanced flexibility, capable of basic movements like squatting and standing. LimX Dynamics continues to advance both bipedal and quadruped robotic systems, pushing the boundaries of robotics innovation.

UKAEA, F-REI Ink Robotics Research Partnership

The UK Atomic Energy Authority (UKAEA) and the Fukushima Institute for Research, Education and Innovation (F-REI) have signed a memorandum of cooperation (MOC) to advance research in robotics and autonomous systems. The collaboration focuses on nuclear decommissioning, facility management, and talent development, strengthening UK-Japan ties in science and innovation. UKAEA's robotics expertise, including its RACE facility, complements F-REI’s mission to drive Japan’s technological growth. Signed on March 4, 2025, at UKAEA’s Culham Campus, the MOC aims to accelerate advancements with real-world impact in both fusion energy and Fukushima’s reconstruction efforts.

Robot Research In The News

AI researchers launch AnySense app for gathering visual training data

Researchers at NYU’s GRAIL Lab have launched AnySense, an iOS app designed for gathering visual training data for robotics models. It supports Robot Utility Models (RUM), which enable robots to perform tasks in unseen environments without extensive training.

To aid data collection, the team developed “The Stick,” a 3D-printed, iPhone-powered gripper, and AnySkin, a tactile sensor for measuring contact force. AnySense integrates iPhone sensors with external inputs and is open-source for the robotics community. Available now on the Apple App Store, it aims to improve multi-sensory learning in robotics.

Sony's Aibo dog could soon walk quietly and perform elaborate dance routines

Sony’s Aibo robot puppy can already walk, respond to calls, play, and cuddle, but researchers at ETH Zurich and Sony have developed reinforcement learning (RL) models to improve its abilities further.

One model reduces footstep noise, making Aibo’s movement quieter by adjusting joint stiffness and damping based on sensor data. Tests showed it significantly outperformed Sony’s existing controllers.

Another model, Deep Fourier Mimic (DFM), enhances Aibo’s dance skills, creating smoother, more expressive movements that allow it to interact with users dynamically.

These advances could improve home robots and theme park entertainment systems in the future.

Robot Workforce Story Of The Week

Trump’s war on DEI comes for programs helping autistic students find jobs in STEM

A Vanderbilt University program helping autistic students enter STEM careers faces an existential threat due to the Trump administration’s DEI restrictions. The Frist Center for Autism and Innovation, led by physicist Keivan Stassun, was set to receive $7.5M in NSF grants to expand neurodivergent inclusion in STEM. However, these funds were suspended under new executive orders targeting DEI programs.

Stassun, a MacArthur “Genius Grant” recipient, says the cuts will force layoffs, including autistic staff, and halt critical workforce development. The center, which received support from top engineering schools and major corporations, aimed to fill talent gaps in AI, cybersecurity, and engineering.

Despite its non-political focus, the program was flagged for using terms like “neurodiversity” and “inclusion.” The National Science Foundation says it is reviewing grants to ensure compliance with federal orders. Stassun warns that the cuts waste valuable talent and undermine America’s STEM workforce.

Robot Video Of The Week

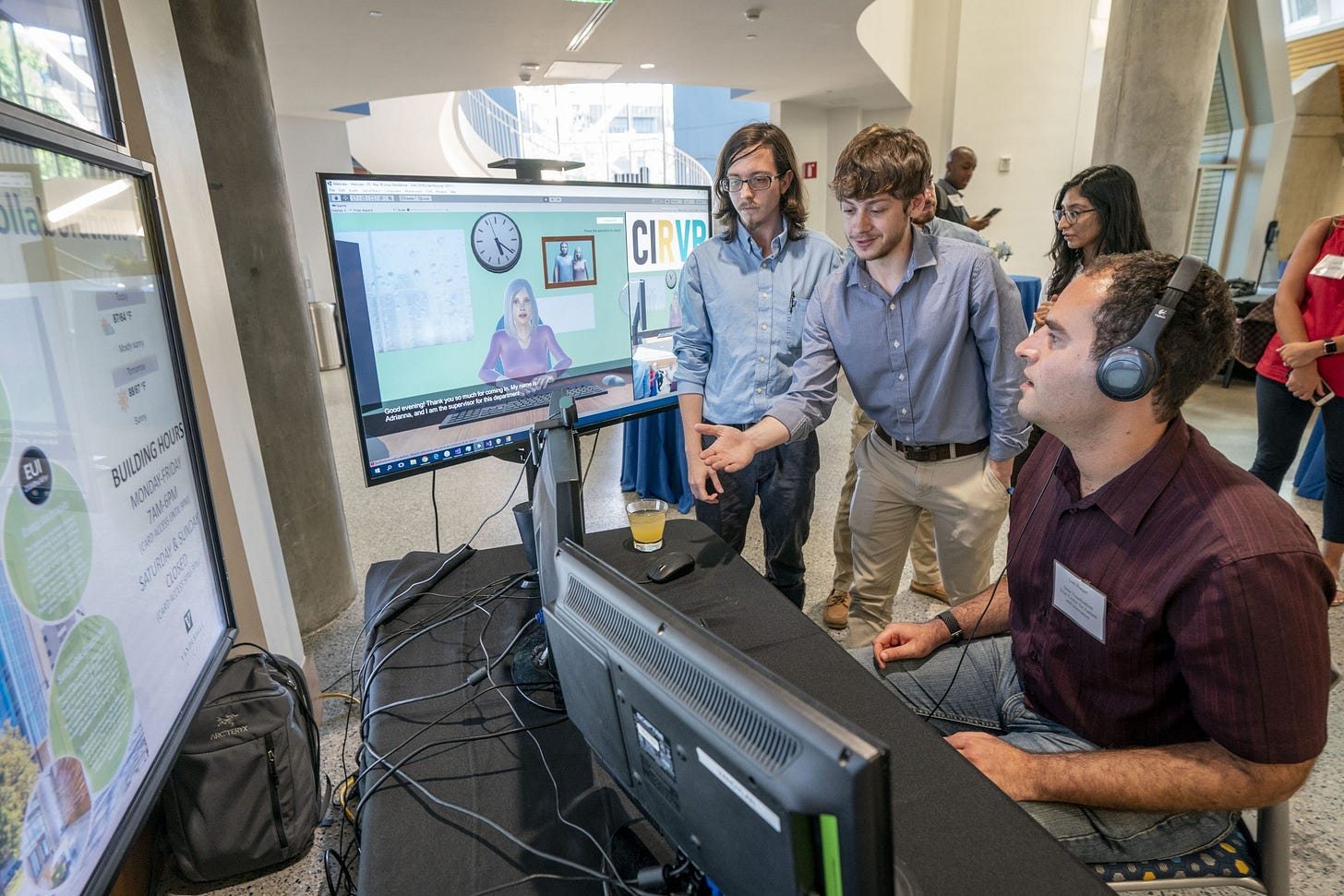

Researchers at the University of Washington’s Personal Robotics Lab developed ADA, a robotic arm that helps people with motor impairments feed themselves. Tested outside the lab, ADA successfully fed users with 80% accuracy and adapted to real-world dining environments. The team aims to enhance customizability for greater independence.

Upcoming Robot Events

Mar. 17-21 NVIDIA GTC AI Conference (San Jose, CA)

Mar. 21-23 Intl. Conference on Robotics and Intelligent Technology (Macau)

Mar. 25-27 European Robotics Forum (Stuttgart)

Apr. 23-26 RoboSoft (Lausanne, Switzerland)

Apr. 30-May 1 Robotics Summit (Boston, MA)

May 12-15 Automate (Detroit, MI)

May 17-23 ICRA 2025 (Atlanta, GA)

May 18-21 Intl. Electric Machines and Drives Conference (Houston, TX)

May 20-21 Robotics & Automation Conference (Tel Aviv)

May 29-30 Humanoid Summit (London)

June 30-July 2 International Conference on Ubiquitous Robots (College Station, TX)

Aug. 18-22 Intl. Conference on Automation Science & Engineering (Anaheim, CA)

Oct. 6-10 Intl. Conference on Advanced Manufacturing (Las Vegas, NV)

Oct. 15-16 RoboBusiness (Santa Clara, CA)

Oct. 19-25 IEEE IROS (Hangzhou, China)

Nov. 3-5 Intl. Robot Safety Conference (Houston, TX)

Dec. 11-12 Humanoid Summit (Silicon Valley, venue TBA)